Microsoft AI Drops rStar2-Agent, a 14B Math Powerhouse

Microsoft has unveiled rStar2-Agent, a lean 14B-parameter model that rethinks how AI handles math. Instead of following the trend of "thinking longer" with ever-longer reasoning chains, it takes a smarter route: agentic reinforcement learning, in which the model uses tools such as Python execution to check and refine its solutions step by step. The kicker? It is reported to outperform 671B-parameter models on competition math while using shorter reasoning traces.

The Problem with "Thinking Longer"

For years, large language models have tackled tough math by simply extending their Chain-of-Thought (CoT) reasoning. In plain English, they just think longer. That works sometimes, but it is also fragile.

Why? Because if the model makes a mistake early in its reasoning, that mistake snowballs through the chain. Instead of catching the error, the model confidently builds on it—leading to wrong answers.

This is where Microsoft’s rStar2-Agent flips the script. Rather than piling on more reasoning steps, it teaches AI to think smarter, mirroring the way real mathematicians double-check their work with tools.

The Agentic Approach: Thinking Smarter, Not Longer

So what’s the big idea behind rStar2-Agent?

Instead of just reasoning internally, the model interacts with Python environments to:

Write snippets of code

Run them

Analyze results

Adjust its reasoning based on actual output

Think of it as having a built-in lab assistant. The AI can propose an idea, test it, and refine it. Just like a mathematician who quickly runs calculations on a computer to verify intuition, rStar2-Agent grounds its reasoning in executable feedback.
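To make the propose, run, and refine loop concrete, here is a minimal sketch in Python. It is not Microsoft's implementation: `propose_step`, `toy_policy`, and the transcript format are hypothetical stand-ins for the model, and the snippet runs code in-process with `exec`, where a real training system would isolate execution in a sandboxed service.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Run a snippet and return what it printed, or an error message.

    Assumption: in-process exec is used only to keep the sketch short;
    untrusted code should run in an isolated sandbox instead.
    """
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})
    except Exception as exc:
        return f"ERROR: {type(exc).__name__}: {exc}"
    return buffer.getvalue()


def solve_with_tools(propose_step, problem: str, max_turns: int = 4) -> str:
    """Alternate between model turns and tool executions.

    `propose_step` is a hypothetical stand-in for the model: given the
    transcript so far, it returns ("code", snippet) or ("answer", text).
    """
    transcript = [("problem", problem)]
    for _ in range(max_turns):
        kind, content = propose_step(transcript)
        if kind == "answer":
            return content
        # Run the proposed snippet and feed the real output back in.
        transcript.append(("code", content))
        transcript.append(("tool_output", run_python(content)))
    return "no answer within turn budget"


def toy_policy(transcript):
    """Scripted stand-in for a model: compute once, then answer from the output."""
    outputs = [text for kind, text in transcript if kind == "tool_output"]
    if not outputs:
        return "code", "print(sum(i * i for i in range(1, 11)))"
    return "answer", outputs[-1].strip()


print(solve_with_tools(toy_policy, "What is the sum of the squares from 1 to 10?"))
```

The point of the design is that the final answer comes from executed output rather than from the model's own arithmetic, so an early slip shows up as a wrong number or an error message that the next turn can react to.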

This "agentic reinforcement learning" setup makes problem-solving dynamic and interactive—a big leap from static text-only reasoning.

Infrastructure Breakthroughs: Scaling the Impossible

Training an agentic model isn’t as simple as plugging in GPUs and hitting "go." When the AI writes and executes code during training, the system must handle tens of thousands of tool calls at once. That’s a nightmare for computational efficiency.

Microsoft cracked the challenge with two major infrastructure innovations:

Distributed Code Execution Service: Handles up to 45,000 concurrent tool calls with sub-second response times.

Dynamic Rollout Scheduler: Balances rollout workloads across GPUs by monitoring each GPU’s available KV-cache capacity in real time.

Thanks to these breakthroughs, training wrapped up in just one week on 64 AMD MI300X GPUs—proof that you don’t need a monster data center to achieve frontier-level AI reasoning.
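The scheduling idea can be sketched in a few lines. This is a toy greedy dispatcher, not the scheduler Microsoft built: the `Worker` class, the `free_kv_tokens` field, and the fixed `tokens_per_rollout` budget are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    free_kv_tokens: int  # hypothetical measure of spare cache capacity
    assigned: list = field(default_factory=list)


def dispatch(requests, workers, tokens_per_rollout):
    """Send each rollout to the worker with the most free cache; queue the rest."""
    waiting = []
    for request in requests:
        best = max(workers, key=lambda w: w.free_kv_tokens)
        if best.free_kv_tokens < tokens_per_rollout:
            waiting.append(request)  # no worker has room right now
            continue
        best.assigned.append(request)
        best.free_kv_tokens -= tokens_per_rollout
    return waiting


workers = [Worker("gpu0", 24_000), Worker("gpu1", 8_000)]
waiting = dispatch(
    [f"rollout-{i}" for i in range(5)], workers, tokens_per_rollout=8_000
)
for w in workers:
    print(w.name, w.assigned)
print("waiting:", waiting)
```

Assigning by free capacity instead of handing every GPU the same number of rollouts keeps a GPU that is already busy with long traces from being overloaded while others sit idle.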

GRPO-RoC: Smarter Learning from Mistakes

The magic behind rStar2-Agent isn’t just hardware. It’s also the GRPO-RoC algorithm, short for Group Relative Policy Optimization with Resample-on-Correct.

Here’s how it works:

Oversampling: The system generates more reasoning traces per problem than it will actually train on.

Filtering: Among the traces that reach a correct answer, it weeds out messy tool usage and formatting errors, keeping the cleanest, most logical solutions.

Diversity: It keeps a sample of failed traces too, so the model still sees a range of error patterns.

This balance helps the model learn from the best without losing the lessons hidden in mistakes. The result? Shorter, sharper reasoning traces—around 10K tokens, compared to the 17K+ tokens of other models.
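Here is a simplified sketch of those three steps, followed by the group-relative advantage that gives GRPO its name. It rests on several assumptions: the `Rollout` fields and the penalty (tool errors plus format issues) are hypothetical, and correct traces are picked deterministically by lowest penalty, which may differ from how the actual algorithm samples them.

```python
import random
import statistics
from dataclasses import dataclass


@dataclass
class Rollout:
    reward: float       # 1.0 if the final answer is correct, else 0.0
    tool_errors: int    # failed tool calls in the trace (hypothetical field)
    format_issues: int  # formatting violations in the trace (hypothetical field)


def resample_on_correct(rollouts, group_size, rng):
    """Downsample an oversampled group to at most `group_size` rollouts."""
    positives = [r for r in rollouts if r.reward > 0]
    negatives = [r for r in rollouts if r.reward <= 0]
    # Keep roughly the original ratio of correct to incorrect traces.
    n_pos = min(len(positives), round(group_size * len(positives) / len(rollouts)))
    n_neg = min(len(negatives), group_size - n_pos)
    # Correct traces: prefer the ones with the fewest tool and format problems.
    positives.sort(key=lambda r: r.tool_errors + r.format_issues)
    # Incorrect traces: sample uniformly so varied failure modes survive.
    return positives[:n_pos] + rng.sample(negatives, n_neg)


def group_relative_advantages(rollouts):
    """GRPO-style advantage: each reward standardized within its group."""
    rewards = [r.reward for r in rollouts]
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # all-same rewards carry no signal
    return [(x - mean) / std for x in rewards]


rng = random.Random(0)
oversampled = [
    Rollout(1.0, 0, 0), Rollout(1.0, 3, 0), Rollout(1.0, 0, 1), Rollout(1.0, 1, 1),
    Rollout(0.0, 2, 0), Rollout(0.0, 0, 0), Rollout(0.0, 1, 1), Rollout(0.0, 0, 2),
]
kept = resample_on_correct(oversampled, group_size=4, rng=rng)
print([(r.reward, r.tool_errors + r.format_issues) for r in kept])
print(group_relative_advantages(kept))
```

The asymmetry is the interesting part: only the correct traces are filtered for quality, so the model is rewarded for clean solutions without being shielded from the ways it fails.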

Training Strategy: From Simple to Complex

Microsoft didn’t just throw hard problems at the model from the start. Instead, training followed a stepwise strategy:

Stage 1 – Keep it Simple

8K token limit

Focus on clean instruction-following and tool formatting

Performance shot from near-zero to 70%

Stage 2 – Scale Up

Expanded to 12K tokens

Introduced more complex reasoning

Stage 3 – Mastery Mode

Filtered out easy problems

Focused solely on competition-level math

This staged approach let the model build confidence first, then tackle harder challenges—just like how students learn math step by step.
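One way to picture the curriculum is as a small configuration plus a filter for the final stage. Everything below is illustrative: the `Stage` class, the `solve_rates` mapping, and the `easy_threshold` cutoff are hypothetical, the 8K and 12K limits are entered as round numbers, and the token limit for the last stage is left unset because no figure is given for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    name: str
    max_response_tokens: Optional[int]  # None where no figure is given
    hard_only: bool


STAGES = [
    Stage("keep-it-simple", 8_000, False),
    Stage("scale-up", 12_000, False),
    Stage("mastery-mode", None, True),
]


def select_problems(stage, solve_rates, easy_threshold=1.0):
    """Return problem ids for a stage, dropping easy ones when hard_only is set.

    `solve_rates` maps a problem id to the fraction of sampled attempts the
    current model gets right; a rate at or above `easy_threshold` counts as easy.
    """
    if not stage.hard_only:
        return sorted(solve_rates)
    return sorted(pid for pid, rate in solve_rates.items() if rate < easy_threshold)


solve_rates = {"p1": 1.0, "p2": 0.25, "p3": 0.0}
for stage in STAGES:
    print(stage.name, select_problems(stage, solve_rates))
```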

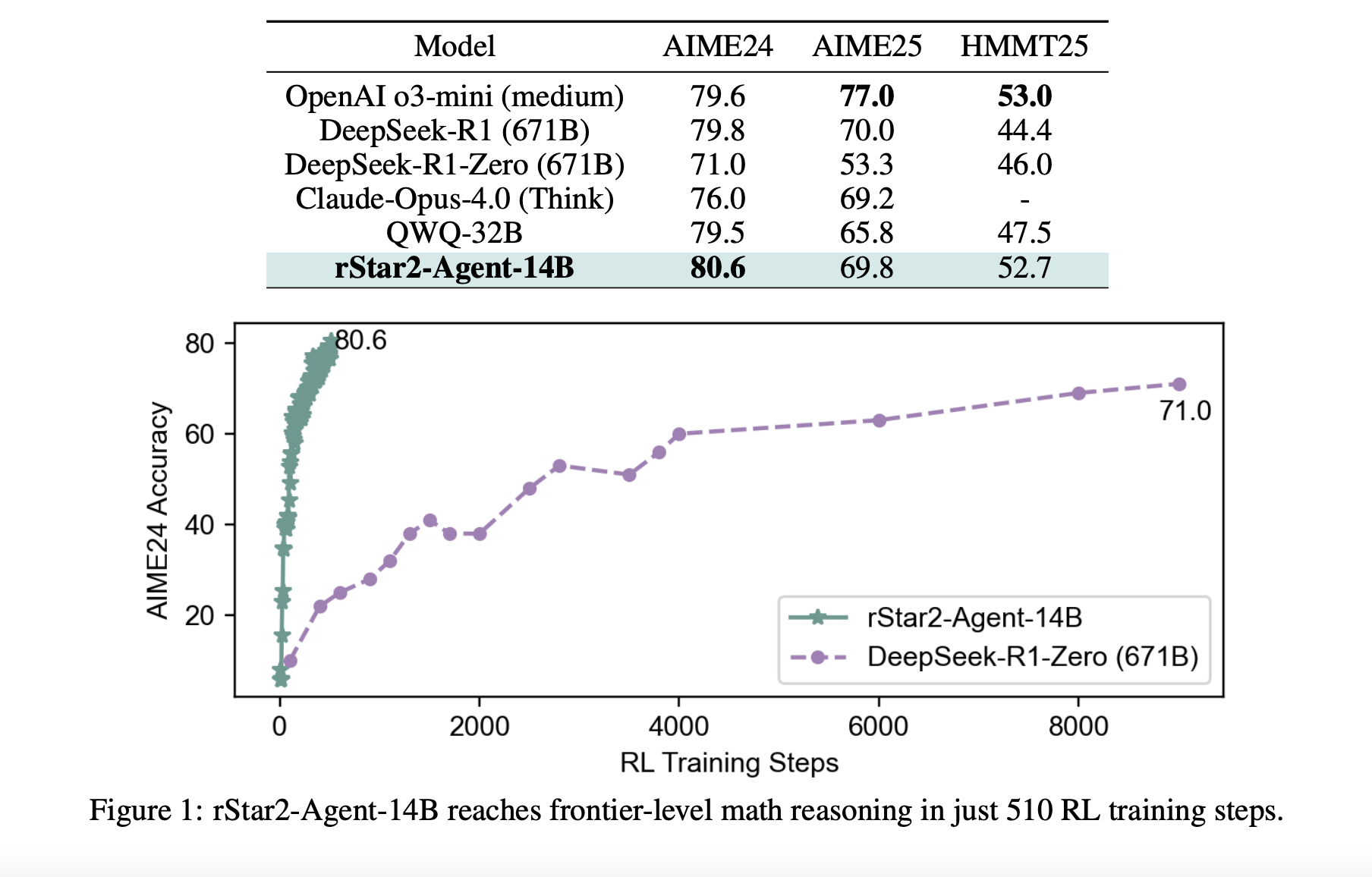

Breakthrough Results: Outperforming Giants

Here’s where jaws drop. Despite having just 14B parameters, rStar2-Agent scored:

80.6% on AIME24

69.8% on AIME25

To put that in perspective, it beat models with 671B parameters, which is roughly 48 times its size.

Other highlights:

Uses ~10K tokens vs 17K+ for similar models

Shows strong transfer, excelling on scientific reasoning tasks despite training only on math

In short: smarter training > brute-force scaling.
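The two efficiency figures are easy to check with quick arithmetic. The snippet uses only the numbers quoted above and treats "17K+" as exactly 17K, so the token saving it prints is a lower bound.

```python
small_params_b, large_params_b = 14, 671  # parameter counts, in billions
short_trace_k, long_trace_k = 10, 17      # response lengths, in thousands of tokens

size_ratio = large_params_b / small_params_b
token_saving = 1 - short_trace_k / long_trace_k

print(f"parameter ratio: {size_ratio:.1f}x")
print(f"token reduction: {token_saving:.0%}")
```

That works out to a parameter ratio just under 48 and reasoning traces at least 41% shorter.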

Understanding the Mechanisms: Reflection Tokens

One fascinating finding was the emergence of reflection tokens.

Normally, models rely on "forking tokens" to explore different reasoning paths. In rStar2-Agent, a second kind of token shows up whenever the model reads tool feedback. These reflection tokens mark deeper reflection, where the model:

Reviews Python output

Diagnoses mistakes

Adjusts its reasoning on the fly

It’s almost like watching the AI think out loud while debugging itself.

Why This Matters: Toward Sustainable AI

The bigger picture here is sustainability. For years, AI progress has leaned on brute-force scaling—bigger datasets, bigger models, bigger costs. But rStar2-Agent proves you can reach frontier-level capabilities without inflating size endlessly.

The key takeaways:

Efficiency matters as much as raw power

Tool integration makes reasoning dynamic

Smarter training beats brute-force scaling

This could pave the way for AI systems that integrate seamlessly with multiple tools—not just generating text, but dynamically solving real-world problems.

Wrapping It Up

Microsoft’s rStar2-Agent is more than just another AI model. It is a proof of concept that efficiency, tool integration, and smart training can outshine brute-force scaling. With 80.6% on AIME24, 69.8% on AIME25, and a lean 14B size, it’s setting the stage for a new era of sustainable AI development.

Instead of endlessly "thinking longer," future AI will think smarter—and rStar2-Agent is leading that charge.

