Meta AI's DeepConf Hits 99.9% on AIME 2025 with Open-Source GPT-OSS-120B

Artificial intelligence is moving fast, but every so often a breakthrough makes the entire industry stop and take notice. That’s exactly what just happened with Meta AI’s DeepConf, a new reasoning method that reached 99.9% accuracy on the AIME 2025 benchmark using OpenAI’s open-weight GPT-OSS-120B model.

This isn’t just another performance milestone; it could be a paradigm shift. DeepConf directly tackles the long-standing trade-off between accuracy and computational efficiency in large language model (LLM) reasoning.

Let’s dive into what makes DeepConf so groundbreaking, how it works, and why it could change the way researchers, developers, and organizations deploy AI reasoning.

The Computational Dilemma in AI Reasoning

Large language models have gotten incredibly good at step-by-step reasoning. Popular techniques like parallel thinking (a.k.a. self-consistency with majority voting) work by sampling multiple reasoning paths for the same question and picking the most common final answer.
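For reference, plain self-consistency reduces to a majority vote over the traces’ final answers. The minimal Python sketch below assumes each trace has already been reduced to a final answer string (answer extraction is not shown), and `majority_vote` is a name chosen for illustration:

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most common final answer across sampled reasoning traces.

    Ties go to the answer seen first, because Counter.most_common orders
    equal counts by first insertion.
    """
    if not answers:
        raise ValueError("need at least one answer")
    answer, _ = Counter(answers).most_common(1)[0]
    return answer


# Five hypothetical traces for one question; each entry is a trace's final answer.
sampled = ["204", "204", "198", "204", "210"]
print(majority_vote(sampled))  # -> 204
```

Every trace counts equally here, which is exactly the weakness described next: a pile of low-quality traces can outvote a few good ones.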

Sounds smart, right? The problem is, this method comes with two major drawbacks:

Diminishing returns: Generating more traces doesn’t always improve accuracy. In fact, low-quality reasoning paths often dilute results.

Skyrocketing costs: Producing hundreds—or thousands—of reasoning traces per query quickly becomes computationally expensive.

For years, this accuracy vs. efficiency trade-off has slowed progress. To boost accuracy, you had to burn more compute. To save resources, you had to accept lower accuracy.

DeepConf changes the game by cutting through that compromise.

Introducing DeepConf: Confidence as Intelligence

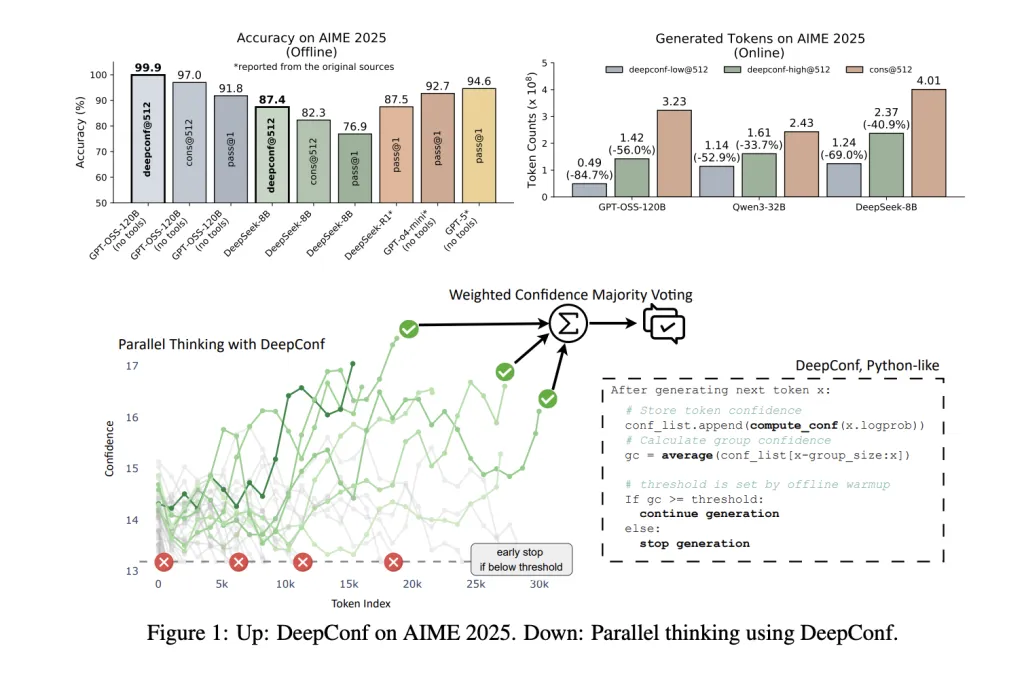

At its core, DeepConf (Deep Think with Confidence) is elegantly simple: instead of treating every reasoning path equally, it listens to the model’s own confidence signals.

Rather than blindly generating endless traces, DeepConf filters out low-confidence reasoning paths and focuses on the most reliable ones. It can do this during generation (online mode), by halting weak traces early, or after all traces are finished (offline mode), by filtering and weighting them before the final vote.

The result? Peak accuracy with far fewer tokens generated.

Meta AI reports that DeepConf delivers:

99.9% accuracy on AIME 2025

Up to 85% fewer generated tokens than full parallel thinking

Compatibility with any LLM that exposes token log-probabilities, with no retraining required

That’s a staggering leap forward.

The Technical Breakthrough: How DeepConf Works

DeepConf’s secret sauce is its set of confidence metrics. Instead of relying on a single score averaged over the whole trace, it defines several complementary measures designed to catch local weaknesses in reasoning.

Advanced Confidence Metrics

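Token Confidence: Measures certainty at each generation step as the negative average log-probability of the top-k candidate tokens; a sharply peaked distribution yields high confidence.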

Group Confidence: Smooths confidence over a window of tokens to track reasoning quality.

Tail Confidence: Focuses on the last part of the reasoning (where answers usually appear).

Lowest Group Confidence: Detects the weakest segments in a trace—often where logic collapses.

Bottom Percentile Confidence: Averages the lowest-scoring groups (for example, the bottom 10%) to highlight the most error-prone sections.
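To make these definitions concrete, here is a minimal Python sketch of the five metrics. It assumes token confidence is the negative mean of the top-k log-probabilities at each step, which is our reading of how DeepConf defines it; the function names, window size, and tail length are illustrative choices, not values from Meta’s implementation.

```python
import math


def token_confidence(top_logprobs: list[float]) -> float:
    """Negative mean log-probability of the top-k candidate tokens at one step.

    When the distribution is peaked, the runner-up candidates have very
    negative log-probabilities, so this score is large.
    """
    return -sum(top_logprobs) / len(top_logprobs)


def group_confidences(token_conf: list[float], window: int) -> list[float]:
    """Mean token confidence for every sliding window of `window` tokens."""
    if len(token_conf) <= window:
        return [sum(token_conf) / len(token_conf)]
    running = sum(token_conf[:window])
    groups = [running / window]
    for i in range(window, len(token_conf)):
        running += token_conf[i] - token_conf[i - window]  # slide by one token
        groups.append(running / window)
    return groups


def tail_confidence(token_conf: list[float], tail: int) -> float:
    """Mean token confidence over the final `tail` tokens of the trace."""
    last = token_conf[-tail:]
    return sum(last) / len(last)


def lowest_group_confidence(groups: list[float]) -> float:
    """Confidence of the weakest window in the trace."""
    return min(groups)


def bottom_percent_confidence(groups: list[float], percent: float = 10.0) -> float:
    """Mean of the lowest `percent`% of group confidences (at least one group)."""
    k = max(1, math.ceil(len(groups) * percent / 100))
    return sum(sorted(groups)[:k]) / k


# Toy trace with top-2 log-probabilities per step; real traces are far longer
# and would use a much larger window.
steps = [[-0.01, -4.6], [-0.05, -3.0], [-0.69, -0.69], [-0.02, -4.0]]
conf = [token_confidence(s) for s in steps]
groups = group_confidences(conf, window=2)
print(lowest_group_confidence(groups), tail_confidence(conf, tail=2))
```

The uncertain third step (two equally likely candidates) drags down every window that contains it, which is what the lowest-group and bottom-percentile scores are meant to surface. Note that the score only behaves as "confidence" when several candidates are averaged; with k = 1 it would be the chosen token’s negative log-probability and would move in the opposite direction.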

Dynamic Filtering and Weighting

Once confidence is measured, DeepConf uses two tricks:

Vote Weighting – Give more influence to high-confidence reasoning traces.

Trace Filtering – Retain only the most confident traces (e.g., the top η% by confidence score).
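Both tricks fit in a few lines once every trace has been reduced to a single confidence score (for instance, one of the metrics above). The Python sketch below is illustrative: the function names, the toy scores, and the use of the raw confidence score as the vote weight are our assumptions, not details taken from Meta’s code.

```python
import math
from collections import Counter, defaultdict


def filter_top_percent(traces: list[tuple[str, float]], eta: float) -> list[tuple[str, float]]:
    """Keep the top `eta` percent of (answer, confidence) traces by confidence."""
    keep = max(1, math.ceil(len(traces) * eta / 100))
    return sorted(traces, key=lambda t: t[1], reverse=True)[:keep]


def weighted_vote(traces: list[tuple[str, float]]) -> str:
    """Confidence-weighted majority vote: each trace adds its score to its answer."""
    totals: dict[str, float] = defaultdict(float)
    for answer, confidence in traces:
        totals[answer] += confidence
    return max(totals, key=totals.get)


# Hypothetical traces: (final answer, trace-level confidence score).
traces = [("204", 9.1), ("198", 3.2), ("198", 2.9), ("198", 3.0), ("204", 8.7), ("210", 4.0)]

plain = Counter(answer for answer, _ in traces).most_common(1)[0][0]
print(plain)                                          # -> 198 (three low-confidence votes)
print(weighted_vote(traces))                          # -> 204
print(weighted_vote(filter_top_percent(traces, 50)))  # -> 204
```

With a plain count, the three low-confidence "198" traces win. Weighting by confidence lets the two high-confidence "204" traces prevail, and filtering to the top half removes most of the noise before the vote even starts.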

In online mode, DeepConf can even stop generating a trace early once its confidence dips below a threshold (set from a small batch of warmup traces), saving compute with little or no loss in accuracy.
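Here is one way to sketch that online mode in Python. We assume the threshold is calibrated from a small batch of warmup traces by taking a percentile of their lowest group confidences, and that the serving engine streams each new group confidence as tokens arrive; `calibrate_threshold` and `run_with_early_stop` are hypothetical names, and the numbers are made up.

```python
import math
from typing import Iterable


def calibrate_threshold(warmup_lowest: list[float], keep_percent: float) -> float:
    """Choose a stop threshold from warmup traces.

    `warmup_lowest` holds the lowest group confidence of each warmup trace.
    The threshold is the nearest-rank value that lets roughly `keep_percent`%
    of those traces stay at or above it.
    """
    ranked = sorted(warmup_lowest, reverse=True)
    index = max(0, math.ceil(len(ranked) * keep_percent / 100) - 1)
    return ranked[index]


def run_with_early_stop(group_conf_stream: Iterable[float], threshold: float) -> tuple[int, bool]:
    """Consume group confidences as they arrive; stop at the first one below threshold.

    Returns (groups consumed, stopped_early).
    """
    consumed = 0
    for conf in group_conf_stream:
        consumed += 1
        if conf < threshold:
            return consumed, True  # abandon this trace and free its compute
    return consumed, False


# Hypothetical lowest-group confidences from ten warmup traces.
warmup = [12.0, 11.5, 9.0, 14.2, 10.1, 13.3, 8.7, 12.8, 11.9, 10.6]
conservative = calibrate_threshold(warmup, keep_percent=90)  # -> 9.0
aggressive = calibrate_threshold(warmup, keep_percent=10)    # -> 14.2

new_trace = [12.0, 10.4, 8.1, 11.0]
print(run_with_early_stop(new_trace, conservative))  # -> (3, True)
print(run_with_early_stop(new_trace, aggressive))    # -> (1, True)
```

A higher keep percentage gives a lower, more conservative threshold that stops fewer traces; a lower keep percentage stops traces more aggressively and saves more tokens, at a greater risk of discarding good reasoning.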

Record-Breaking Performance

DeepConf has been rigorously tested on multiple benchmarks and models, and the numbers are jaw-dropping.

AIME 2025 Highlights (with GPT-OSS-120B):

Pass@1 Accuracy: 91.8%

Self-Consistency @512: 97.0%

DeepConf @512: 99.9%

Tokens Saved: 84.7% fewer generated tokens than full parallel thinking

Other Models:

DeepSeek-8B (AIME 2024) – Jumped from 86.7% → 93.3% with 77.9% fewer tokens.

Qwen3-32B (AIME 2024) – Boosted from 85.3% → 90.8% with 56% fewer tokens.

👉 In plain English: DeepConf doesn’t just boost accuracy, it slashes resource usage at the same time.

Maximum Impact, Minimal Code

One of the most impressive aspects of DeepConf is how easy it is to implement.

For frameworks like vLLM, integration takes roughly 50 lines of code. All you need to do is:

Extend the logprobs processor to track sliding-window confidence.

Add an early-stop check during generation.

Pass confidence thresholds via API.
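We don’t reproduce vLLM’s internals here, but the first two steps can be sketched in a framework-agnostic way. The hypothetical class below assumes the serving engine hands you the top-k log-probabilities of each new token; `SlidingWindowConfidence`, `update`, and `should_stop` are our names, not part of vLLM’s API.

```python
from collections import deque


class SlidingWindowConfidence:
    """Hypothetical helper that tracks group confidence over the last `window` tokens.

    Feed it the top-k log-probabilities of each newly generated token. A running
    sum keeps each update cheap no matter how large the window is.
    """

    def __init__(self, window: int, threshold: float) -> None:
        self.window = window
        self.threshold = threshold
        self._confs = deque()
        self._sum = 0.0

    def update(self, top_logprobs: list[float]) -> None:
        conf = -sum(top_logprobs) / len(top_logprobs)  # token confidence
        self._confs.append(conf)
        self._sum += conf
        if len(self._confs) > self.window:
            self._sum -= self._confs.popleft()  # drop the token leaving the window

    @property
    def group_confidence(self) -> float:
        return self._sum / len(self._confs)

    def should_stop(self) -> bool:
        """True once a full window exists and its mean confidence is below threshold."""
        return len(self._confs) == self.window and self.group_confidence < self.threshold


# Simulated generation loop; a real engine would supply these log-probabilities.
tracker = SlidingWindowConfidence(window=3, threshold=1.5)
fake_steps = [[-0.01, -4.6], [-0.05, -3.0], [-0.02, -4.0], [-0.69, -0.69], [-0.7, -0.7]]
for step, top in enumerate(fake_steps, start=1):
    tracker.update(top)
    if tracker.should_stop():
        print(f"stop after token {step}")  # prints: stop after token 4
        break
```

Waiting for a full window before checking avoids stopping a trace on the noisy first few tokens; the threshold itself would be the value passed in through the API.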

No retraining. No hyperparameter tuning. No architecture modifications. Just plug-and-play efficiency.

Real-World Impact and Applications

DeepConf isn’t just an academic curiosity—it’s immediately useful across industries.

Research and Academia

Enhanced math problem-solving.

Faster, cheaper computation for experiments.

Accelerated discovery cycles.

Education

Smarter AI tutors with fewer errors.

More reliable automated grading.

Personalized learning at scale.

Industry

Cost-effective AI deployment.

Financial modeling with lower compute demands.

Reliable decision-support systems.

In all these domains, DeepConf lowers barriers to advanced AI reasoning by making it efficient, affordable, and accessible.

Expert Insights and Limitations

Some commentators have called DeepConf a paradigm shift, arguing that it shows AI can maintain peak accuracy without brute-force token generation.

That said, some caution is warranted. Confidence filtering isn’t foolproof:

Overconfidence risk – Models can be highly confident yet still wrong, and aggressive filtering can then amplify a confidently wrong answer.

Deployment sensitivity – Conservative thresholds may be needed in safety-critical use cases.
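The overconfidence risk is easy to reproduce with made-up numbers. In this hypothetical Python sketch, one confidently wrong trace competes with nine moderately confident correct ones; the helper name and the scores are invented for illustration, and the vote uses the keep-top-percent-then-weight scheme described earlier.

```python
import math
from collections import defaultdict


def filtered_weighted_vote(traces: list[tuple[str, float]], keep_percent: float) -> str:
    """Keep the most confident traces, then run a confidence-weighted vote."""
    keep = max(1, math.ceil(len(traces) * keep_percent / 100))
    kept = sorted(traces, key=lambda t: t[1], reverse=True)[:keep]
    totals: dict[str, float] = defaultdict(float)
    for answer, confidence in kept:
        totals[answer] += confidence
    return max(totals, key=totals.get)


# Hypothetical: one confidently wrong trace ("17") and nine correct ones ("42").
traces = [("17", 15.0)] + [("42", 10.0 + 0.1 * i) for i in range(9)]

print(filtered_weighted_vote(traces, keep_percent=10))  # aggressive -> 17
print(filtered_weighted_vote(traces, keep_percent=90))  # conservative -> 42
```

Keeping only the single most confident trace locks in the wrong answer, while the conservative setting lets the broader consensus win, which is why safety-critical deployments may prefer to keep more traces.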

Still, for many research and enterprise applications, the efficiency gains are likely to outweigh these risks.

Future Outlook

DeepConf is likely just the beginning of a new era in reasoning optimization. Here’s what may come next:

Broader Adoption: Confidence-based reasoning will spread to financial tools, scientific models, and education platforms.

Refinements: Researchers will improve filtering to reduce rare failure cases.

New Standards: Expect confidence-driven methods to become benchmarks for AI efficiency.

And because the project is open-source, even smaller labs and startups can immediately tap into world-class reasoning performance.

The Open-Source Advantage

Meta AI didn’t just announce DeepConf—they made it available to everyone. With the implementation on GitHub, developers can:

Clone, test, and deploy DeepConf in hours.

Contribute improvements.

Build new applications without massive compute budgets.

This democratization could accelerate progress across the entire AI ecosystem.

Wrapping It Up

Meta AI’s DeepConf is more than a technical trick; it’s a breakthrough that tackles one of the toughest challenges in AI reasoning. By reaching 99.9% accuracy on AIME 2025 and cutting token usage by up to 85%, it redefines what’s possible.

For researchers: It means faster, cheaper experiments.

For educators: Smarter and more reliable AI tutors.

For enterprises: Lower costs without compromising quality.

And for the AI field as a whole, it signals a new era where accuracy and efficiency finally go hand in hand.

===================================================================

Master Generative AI in just 8 weeks with the GenAI Launchpad by Build Fast with AI.

Gain hands-on, project-based learning with 100+ tutorials, 30+ ready-to-use templates, and weekly live mentorship by Satvik Paramkusham (IIT Delhi alum).

No coding required—start building real-world AI solutions today.

👉 Enroll now: www.buildfastwithai.com/genai-course

⚡ Limited seats available!

===================================================================

Resources & Community

Join our vibrant community of 12,000+ AI enthusiasts and level up your AI skills—whether you're just starting or already building sophisticated systems. Explore hands-on learning with practical tutorials, open-source experiments, and real-world AI tools to understand, create, and deploy AI agents with confidence.

Website: www.buildfastwithai.com

GitHub (Gen-AI-Experiments): git.new/genai-experiments

LinkedIn: linkedin.com/company/build-fast-with-ai

Instagram: instagram.com/buildfastwithai

Twitter (X): x.com/satvikps

Telegram: t.me/BuildFastWithAI