Are you waiting for the future or creating it?

Be a part of Gen AI Launch Pad 2024 and take charge of what’s next. Act today for a better tomorrow.

Introduction

Welcome to this step-by-step guide on integrating multiple Large Language Models (LLMs) seamlessly using LiteLLM. Whether you're working with OpenAI's models or other providers like Google or Anthropic, LiteLLM simplifies your workflow with a unified API. By the end of this post, you'll learn how to:

- Set up LiteLLM.

- Use LiteLLM to interact with models like GPT-4.

- Integrate LiteLLM with LangChain for more advanced AI-powered applications.

We'll provide code examples, detailed explanations, and potential real-world applications to help you get the most out of this powerful tool.

1. Introduction to LiteLLM

LiteLLM is designed to make accessing LLMs easier by providing:

- Unified API for multiple providers (OpenAI, Google, Anthropic, etc.).

- Support for 50+ models.

- Features like load balancing, cost tracking, and streaming responses.

Key Benefits of LiteLLM:

- Simplifies integration with different LLM providers.

- Reduces the complexity of managing multiple APIs.

- Ideal for scalable and efficient LLM-based applications.

2. Setup and Installation

To get started, you'll need to install litellm and a few additional libraries for extended functionality:

!pip install litellm langchain langchain_community

These libraries include:

- LiteLLM: For simplified LLM integration.

- LangChain: For building chains of LLM-powered tools.

- LangChain Community: Additional integrations and utilities for LangChain.

3. Setting Your API Keys

To authenticate with providers like OpenAI, you'll need to set your API keys.

If you're using Google Colab, you can set environment variables like this:

from google.colab import userdata

import os

os.environ['OPENAI_API_KEY'] = userdata.get('OPENAI_API_KEY')

OPENAIKEY = os.getenv('OPENAI_API_KEY')

This ensures your API keys are stored securely while running the code.

4. Calling OpenAI Models with LiteLLM

Let's make a simple call to OpenAI's GPT-4 using LiteLLM:

import openai

import litellm

response = litellm.completion(

model="gpt-4o",

messages=[{"content": "What's the weather in SF?", "role": "user"}]

)

# Print the response

print(response)

Expected Output:

You'll get a response from the model like:

{'choices': [{'message': {'content': "I'm sorry, but I don't have real-time weather data."}}]}

Explanation:

litellm.completion(): Simplifies the process of interacting with LLMs.- The

modelparameter specifies the model to use (e.g.,"gpt-4o"). - The

messagesparameter follows the chat format used by OpenAI's API.

Real-World Use Case:

- Building chatbots that respond to user queries.

- Automating tasks like customer support or information retrieval.

5. Integrating LiteLLM with LangChain

LangChain helps create more complex AI-powered applications by chaining different tools and models together.

Here's an example of using LiteLLM with LangChain:

import os

from langchain.chat_models import ChatLiteLLM

from langchain.prompts.chat import (

ChatPromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

)

from langchain.schema import AIMessage, HumanMessage

chat = ChatLiteLLM(model="gpt-4o")

messages = [

HumanMessage(content="What model are you?")

]

response = chat(messages)

print(response)

Expected Output:

AIMessage(content="I am a GPT-4 model.")

Explanation:

ChatLiteLLM: A LangChain wrapper for LiteLLM.- Prompt Templates: Allow you to structure your conversations more effectively.

- Useful for applications where conversations need to be dynamically managed.

Real-World Use Case:

- Creating intelligent agents that maintain context across conversations.

- Building applications that require complex prompting logic.

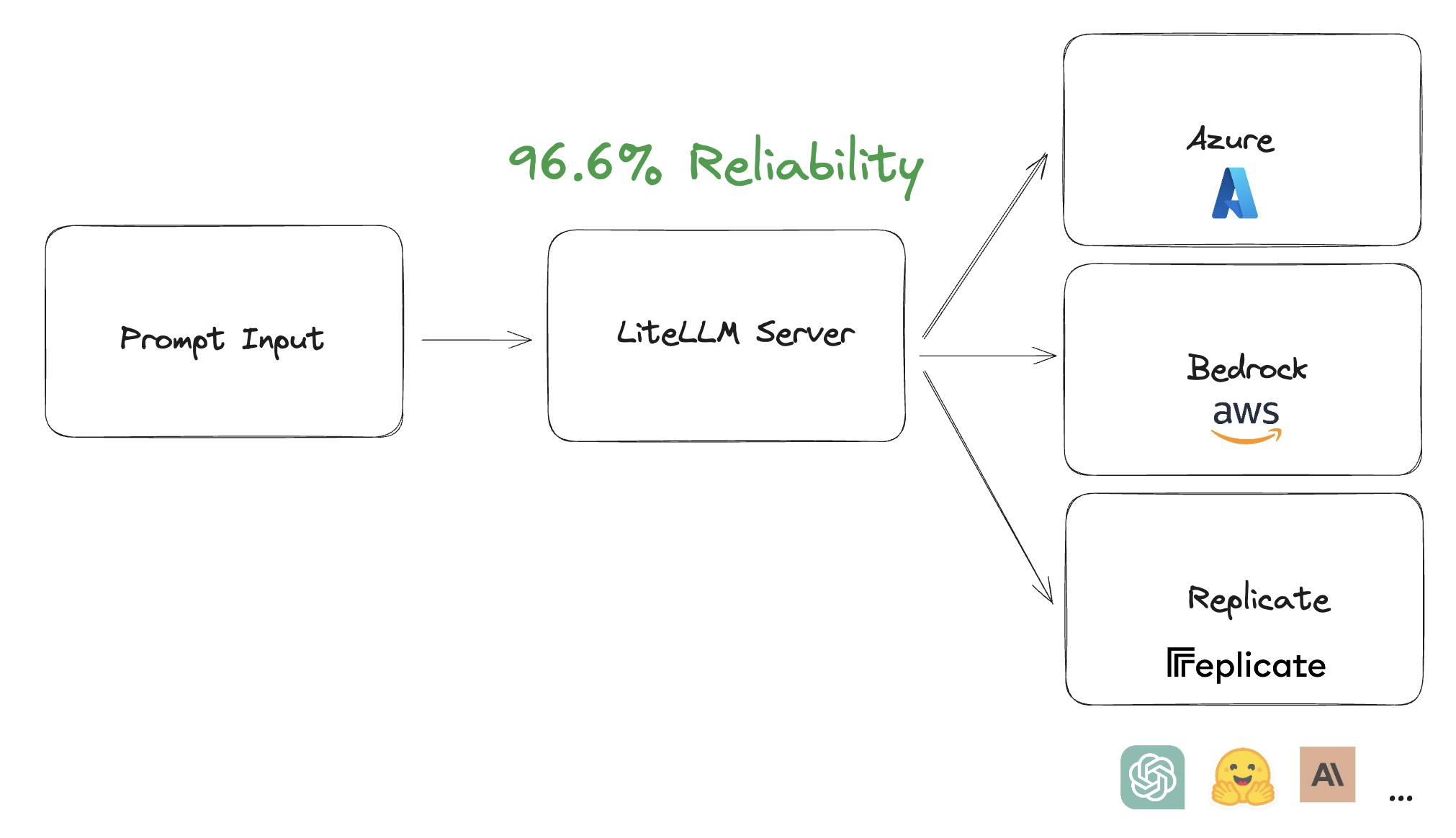

6. Visual Aids and Diagrams

To improve understanding, consider including the following visual aids:

- Flowchart illustrating how LiteLLM interacts with different LLM providers.

- Screenshots of example outputs from running the code.

- Diagrams showing how LangChain integrates with LiteLLM for advanced workflows.

Conclusion

LiteLLM is a powerful tool that simplifies working with various large language models. By integrating LiteLLM with LangChain, you can build scalable, efficient, and complex AI applications with ease.

Key Takeaways:

- LiteLLM unifies access to multiple LLM providers.

- Setting up LiteLLM is straightforward, and it works well with LangChain.

- You can create chatbots, intelligent agents, and more with just a few lines of code.

Resources

- LiteLLM GitHub: LiteLLM Repository

- LangChain Documentation: LangChain Docs

- OpenAI API Reference: OpenAI Docs

- LiteLLM: Simplified LLM Access Build Fast with AI : NoteBook

---------------------------------

Stay Updated:- Follow Build Fast with AI pages for all the latest AI updates and resources.

Experts predict 2025 will be the defining year for Gen AI implementation.Want to be ahead of the curve?

Join Build Fast with AI’s Gen AI Launch Pad 2025 - your accelerated path to mastering AI tools and building revolutionary applications.