Grok-4-Fast: xAI’s Unified Reasoning Model for Cost-Efficient AI

A New Era of Cost-Efficient Intelligence

In the race to build more powerful AI systems, cost and efficiency have become just as important as raw performance. xAI’s latest release, Grok-4-Fast, redefines what’s possible by combining frontier-level intelligence with breakthrough efficiency.

Building on Grok-4, this model introduces a unified reasoning architecture, a 2M-token context window, and native web and X search, all at a fraction of competitors' prices. For developers, startups, and enterprises alike, Grok-4-Fast makes high-quality reasoning faster, cheaper, and more accessible.

Unified Architecture: One Model, Many Modes

Traditionally, AI developers had to choose between separate models for long-chain reasoning and quick responses. Grok-4-Fast removes that tradeoff with a single, unified set of weights that supports both reasoning and non-reasoning modes.

Through system prompts, users can steer the model toward fast, short-form answers or extended, multi-step reasoning, without switching to a different underlying model. This cuts latency, integration complexity, and cost, making Grok-4-Fast a strong fit for search, Q&A, coding, and real-time assistants.
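To make the mode choice concrete, here is a minimal sketch that builds (but does not send) a chat-completions request for either mode. It assumes an OpenAI-compatible endpoint at `https://api.x.ai/v1/chat/completions` and the model IDs `grok-4-fast-reasoning` / `grok-4-fast-non-reasoning`; treat both as assumptions to check against xAI's API documentation. `build_chat_request` is a hypothetical helper, not part of any SDK.

```python
import json
from typing import Optional

# ASSUMPTIONS (not confirmed by this article): endpoint URL and model IDs.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"
MODEL_IDS = {
    "reasoning": "grok-4-fast-reasoning",
    "non-reasoning": "grok-4-fast-non-reasoning",
}


def build_chat_request(prompt: str, mode: str = "non-reasoning",
                       system: Optional[str] = None) -> dict:
    """Build (but do not send) a chat-completions payload for the chosen mode."""
    if mode not in MODEL_IDS:
        raise ValueError(f"unknown mode: {mode!r}")
    messages = []
    if system is not None:
        # The system prompt nudges the model toward short or long-form answers.
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return {"model": MODEL_IDS[mode], "messages": messages}


quick = build_chat_request("What is the capital of France?",
                           system="Answer in one word.")
deep = build_chat_request("Prove that the square root of 2 is irrational.",
                          mode="reasoning",
                          system="Reason step by step before answering.")
print(json.dumps(quick, indent=2))
```

To actually send a payload, you would POST it as JSON to `XAI_CHAT_URL` with an `Authorization: Bearer <your key>` header, for example via `requests.post(XAI_CHAT_URL, json=quick, headers=...)`. Keeping request construction separate from transport makes the mode switch a one-argument change.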

Key Features and Technical Specs

2,000,000-token context window: Handles entire repositories, long documents, and complex multi-turn conversations.

Tool-use reinforcement learning: Trained end-to-end to decide when to browse the web, execute code, or call external APIs.

Search excellence: Natively searches both the web and X, following links, ingesting images and videos, and synthesizing results in real time.

Two SKUs, one architecture: Developers can choose between reasoning and non-reasoning modes — both with full 2M context.

For real-world workflows, this means smoother development, smarter research, and faster automation.
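Tool use from the developer's side usually looks like function calling: you describe tools in the request and the model decides whether to call them. The sketch below assumes the OpenAI-style `tools` / `tool_calls` schema and an assumed model ID; `get_weather` is a hypothetical local tool, and the model reply is simulated rather than fetched. It illustrates client-side function calling only, not xAI's built-in web and X search.

```python
import json
from typing import Any, Callable, Dict, List


def make_tool(name: str, description: str,
              properties: Dict[str, Any], required: List[str]) -> dict:
    """Describe a callable tool in the OpenAI-style function-calling schema."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object",
                           "properties": properties,
                           "required": required},
        },
    }


def get_weather(city: str) -> dict:
    """HYPOTHETICAL local tool; a real one would call a weather service."""
    return {"city": city, "forecast": "rain"}


TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

request = {
    "model": "grok-4-fast-reasoning",  # ASSUMED model ID
    "messages": [{"role": "user", "content": "Do I need an umbrella in Paris?"}],
    "tools": [make_tool("get_weather", "Current weather for a city.",
                        {"city": {"type": "string"}}, ["city"])],
    "tool_choice": "auto",  # the model decides whether a tool is needed
}


def run_tool_calls(assistant_message: dict) -> List[dict]:
    """Execute any tool calls in a model reply and build 'tool' messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])
        results.append({"role": "tool",
                        "tool_call_id": call["id"],
                        "content": json.dumps(fn(**args))})
    return results


# A simulated reply in which the model chose to call the tool.
fake_reply = {"role": "assistant", "content": None, "tool_calls": [{
    "id": "call_1", "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}]}
tool_messages = run_tool_calls(fake_reply)
```

In a real loop, you would append the assistant reply and `tool_messages` to `messages` and call the model again so it can answer using the tool output. A reply without `tool_calls` simply yields an empty list.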

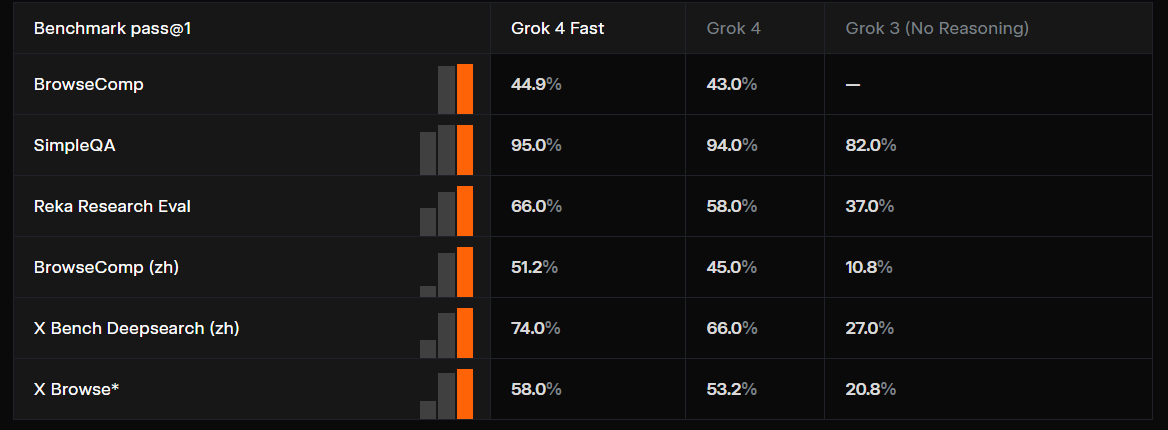

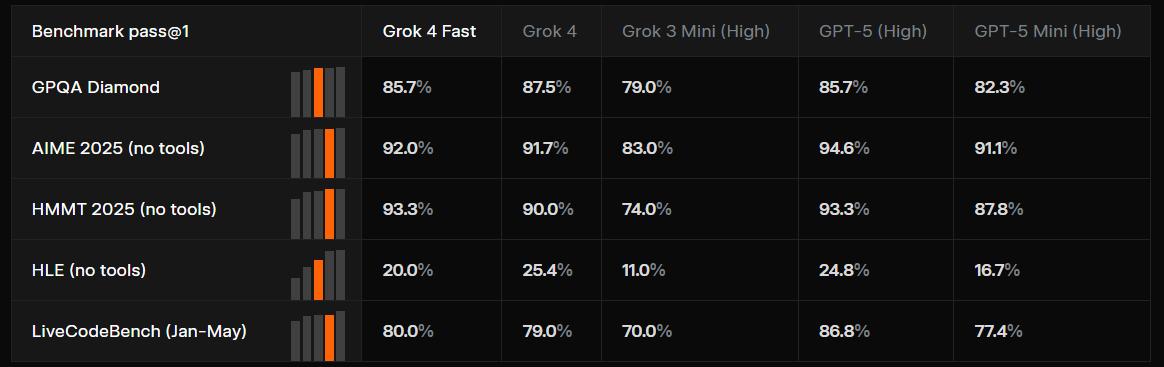
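Before sending an entire repository into the 2M-token context window, it helps to estimate its size. This rough sketch uses the common heuristic of about 4 characters per token (an assumption; real tokenizer counts vary by language and content) and reserves headroom for the reply. The helper names and the file-extension list are hypothetical.

```python
import os
from typing import Iterable

CONTEXT_WINDOW = 2_000_000  # tokens, per the spec above
CHARS_PER_TOKEN = 4         # ASSUMED rough heuristic; real counts vary


def estimate_tokens(text: str) -> int:
    """Approximate token count as ceiling(len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str,
                         exts: Iterable[str] = (".py", ".md", ".ts", ".go", ".rs")) -> int:
    """Sum estimated tokens over files under root with the given extensions."""
    suffixes = tuple(exts)
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(suffixes):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total


def fits_in_context(estimated_tokens: int, reserve_for_output: int = 50_000) -> bool:
    """True if the prompt plus reserved reply budget stays within the window."""
    return estimated_tokens + reserve_for_output <= CONTEXT_WINDOW
```

Usage: `fits_in_context(estimate_repo_tokens("path/to/repo"))`. For exact numbers, replace `estimate_tokens` with a real tokenizer count.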

Benchmark Performance and Efficiency Gains

Despite being optimized for speed and cost, Grok-4-Fast posts frontier-class benchmark scores:

AIME 2025: 92.0% (close to GPT-5 High's 94.6%)

HMMT 2025: 93.3% (matching GPT-5 High, outperforming Grok-4)

GPQA Diamond: 85.7% (on par with GPT-5 High)

But the real breakthrough lies in efficiency:

Uses 40% fewer thinking tokens than Grok-4 on average.

Matches Grok-4's performance on frontier benchmarks at a 98% lower price.

Independent analysis by Artificial Analysis rates its price-to-intelligence ratio as best in class.

For businesses scaling AI, this translates to frontier performance at startup-friendly costs.
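The 40% and 98% figures fit together under a simple back-of-envelope model. The sketch assumes that a task's cost is driven by output (thinking) tokens alone and uses Grok-4's list price of $15.00 per 1M output tokens against Grok-4-Fast's $0.50. It is an illustration of how the two headline numbers relate, not xAI's methodology; the article does not describe how the 98% figure was computed.

```python
# ASSUMED list prices, USD per 1M output tokens.
GROK4_OUTPUT_PRICE = 15.00
GROK4_FAST_OUTPUT_PRICE = 0.50


def relative_cost(token_ratio: float, old_price: float, new_price: float) -> float:
    """New model's cost as a fraction of the old one's for the same task.

    Simplification: treats the task's cost as proportional to output tokens only.
    """
    return token_ratio * (new_price / old_price)


# "40% fewer thinking tokens" means Grok-4-Fast emits 0.6x as many tokens.
ratio = relative_cost(0.6, GROK4_OUTPUT_PRICE, GROK4_FAST_OUTPUT_PRICE)
print(f"relative cost: {ratio:.2f}, reduction: {1 - ratio:.0%}")
```

Here 0.6 × (0.50 / 15.00) = 0.02, so the same task costs about 2% as much, a 98% reduction. Input tokens are ignored, though their price ratio ($0.20 vs. $3.00) points the same way.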

Pricing: Breaking the Barrier

xAI has introduced a radically affordable pricing structure:

Input tokens (<128k): $0.20 per 1M

Output tokens (<128k): $0.50 per 1M

Cached input tokens: $0.05 per 1M

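Compared with GPT-5 High ($1.25 input / $10.00 output per 1M tokens) and Claude Sonnet 4 ($3.00 input / $15.00 output per 1M tokens), Grok-4-Fast's per-token list prices are about 6x to 20x lower than GPT-5 High's and 15x to 30x lower than Claude Sonnet 4's.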
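A small cost calculator shows what these list prices mean for a concrete workload. The prices are the ones quoted in this article; the dictionary keys are plain labels, not API model IDs, and the 50M-input / 10M-output monthly workload is hypothetical. The sketch also assumes every request stays under the 128k-token tier that Grok-4-Fast's quoted prices apply to.

```python
from dataclasses import dataclass
from typing import Dict, Optional

PER_MILLION = 1_000_000


@dataclass(frozen=True)
class Price:
    """List prices in USD per 1M tokens."""
    input: float
    output: float
    cached_input: Optional[float] = None  # None: no cached rate listed here


# Prices as quoted in this article (Grok-4-Fast: requests under 128k tokens).
PRICES: Dict[str, Price] = {
    "grok-4-fast": Price(input=0.20, output=0.50, cached_input=0.05),
    "gpt-5-high": Price(input=1.25, output=10.00),
    "claude-sonnet-4": Price(input=3.00, output=15.00),
}


def cost_usd(price: Price, input_tokens: int, output_tokens: int,
             cached_tokens: int = 0) -> float:
    """Workload cost; cached tokens fall back to the input rate if unlisted."""
    cached_rate = price.input if price.cached_input is None else price.cached_input
    return (input_tokens * price.input
            + cached_tokens * cached_rate
            + output_tokens * price.output) / PER_MILLION


# HYPOTHETICAL monthly workload: 50M fresh input tokens, 10M output tokens.
costs = {name: cost_usd(p, 50_000_000, 10_000_000) for name, p in PRICES.items()}
for name, usd in costs.items():
    ratio = usd / costs["grok-4-fast"]
    print(f"{name:16s} ${usd:8.2f}  ({ratio:.1f}x Grok-4-Fast)")
```

For this workload, Grok-4-Fast comes to $15.00, GPT-5 High to $162.50, and Claude Sonnet 4 to $300.00. The exact multiple depends on your input/output mix, which is why per-token ratios alone can mislead.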

This opens frontier AI to startups, researchers, and individual developers who previously couldn’t afford it.

Developer Impact: Speed + Flow State

For developers, Grok-4-Fast isn’t just cheaper — it’s faster and more fluid.

92 tokens/sec throughput enables real-time coding assistance.

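Reportedly built on a mixture-of-experts (MoE) architecture optimized for agentic coding workflows; xAI has not confirmed a parameter count, and the frequently cited 314B figure matches the open-weights Grok-1.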

Prompt-cache hit rates above 90% keep iterative requests fast and cheap.

Visible reasoning traces let developers understand how the model thinks.

As one early adopter put it:

“It’s fast enough to keep me in flow state — I don’t have time to context switch.”
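The cache-hit and throughput figures above translate into rough cost and latency estimates. This sketch assumes the cached and uncached input rates from the pricing section apply token by token, and that replies stream at a steady 92 tokens per second (ignoring time-to-first-token and network overhead). Both helper functions are hypothetical.

```python
# Grok-4-Fast input rates from the pricing section (USD per 1M tokens, <128k).
UNCACHED_INPUT = 0.20
CACHED_INPUT = 0.05


def blended_input_price(hit_rate: float) -> float:
    """Effective USD per 1M input tokens when hit_rate of them hit the cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * CACHED_INPUT + (1.0 - hit_rate) * UNCACHED_INPUT


def stream_seconds(output_tokens: int, tokens_per_sec: float = 92.0) -> float:
    """Time to stream a reply at a steady rate; ignores time-to-first-token."""
    return output_tokens / tokens_per_sec


for hit in (0.0, 0.5, 0.9):
    print(f"hit rate {hit:.0%}: ${blended_input_price(hit):.3f} per 1M input tokens")
print(f"500-token reply: ~{stream_seconds(500):.1f} s at 92 tokens/s")
```

At a 90% hit rate, the effective input price falls from $0.20 to about $0.065 per 1M tokens, roughly a two-thirds saving, which is why keeping a stable prompt prefix across iterations pays off.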

Industry Implications and Accessibility

Grok-4-Fast is already shaking up the AI landscape:

Startups: Can scale without ballooning costs.

Researchers: Gain affordable access to frontier-level models.

Enterprises: Cut compute costs while maintaining quality.

Developers: Integrate real-time AI into coding and automation workflows.

It’s available today on grok.com, the Grok mobile apps, the xAI API, OpenRouter, and Vercel AI Gateway, and for the first time xAI is offering free access to all users.

The New Paradigm of Unified Intelligence

With Grok-4-Fast, xAI shows that reasoning power and efficiency no longer need to be competing goals. The unified architecture not only cuts costs but also simplifies deployment and improves the user experience.

For the AI industry, this marks a turning point:

No tradeoff between speed and intelligence.

No barriers to entry for frontier AI.

A new standard for cost-efficient, deployable intelligence.

As AI continues to evolve, Grok-4-Fast sets the stage for a future where every developer, researcher, and business can access high-performance reasoning at scale.


Resources & Community

Join our vibrant community of 12,000+ AI enthusiasts and level up your AI skills—whether you're just starting or already building sophisticated systems. Explore hands-on learning with practical tutorials, open-source experiments, and real-world AI tools to understand, create, and deploy AI agents with confidence.

Website: www.buildfastwithai.com

GitHub (Gen-AI-Experiments): git.new/genai-experiments

LinkedIn: linkedin.com/company/build-fast-with-ai

Instagram: instagram.com/buildfastwithai

Twitter (X): x.com/satvikps

Telegram: t.me/BuildFastWithAI