GPT-5.3-Codex vs Claude Opus 4.6 vs Kimi K2.5: The AI Coding War Nobody Expected

On February 5, 2026, at approximately 10:00 AM Pacific Time, Anthropic launched Claude Opus 4.6. For about twenty minutes, it was the undisputed state-of-the-art coding model in the world.

Then OpenAI dropped GPT-5.3-Codex. Twenty minutes later. Whether it was planned counter-programming or a reactive launch is still debated — OpenAI hasn't commented on the timing, and Anthropic CEO Dario Amodei just gave a "tight-lipped smile" when asked about it at a press event.

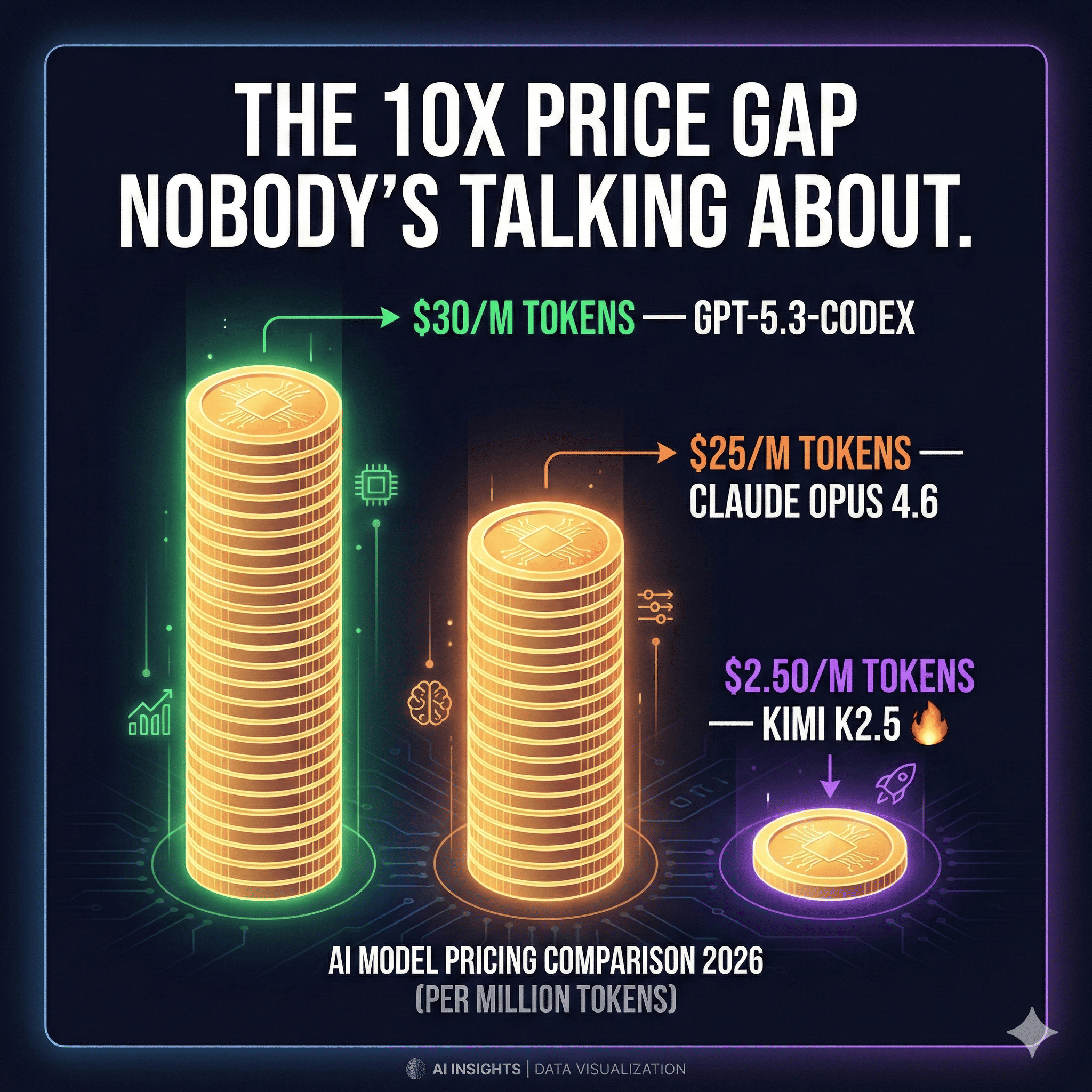

And while Silicon Valley was processing that drama, Moonshot AI's Kimi K2.5 — released just nine days earlier on January 27 — was quietly offering comparable coding performance at 10× lower API pricing than either competitor.

I've spent the past two weeks testing all three, reading every benchmark paper, and talking to developers who've shipped production code with each. Here's what I've found — and honestly, the "which one wins" answer is more complicated than any headline will tell you.

Table of Contents

The February 5 Showdown: What Actually Happened

Head-to-Head Benchmarks: The Numbers That Matter

GPT-5.3-Codex: The Terminal Terminator

Claude Opus 4.6: The Enterprise Brain

Kimi K2.5: The Open-Source Wildcard

Pricing Breakdown: Where Your Money Actually Goes

Which Model Should You Actually Use?

The Bigger Story: What This Competition Means for Developers

FAQ

The February 5 Showdown: What Actually Happened

Let me set the scene, because the timing tells you everything about the state of AI competition in 2026.

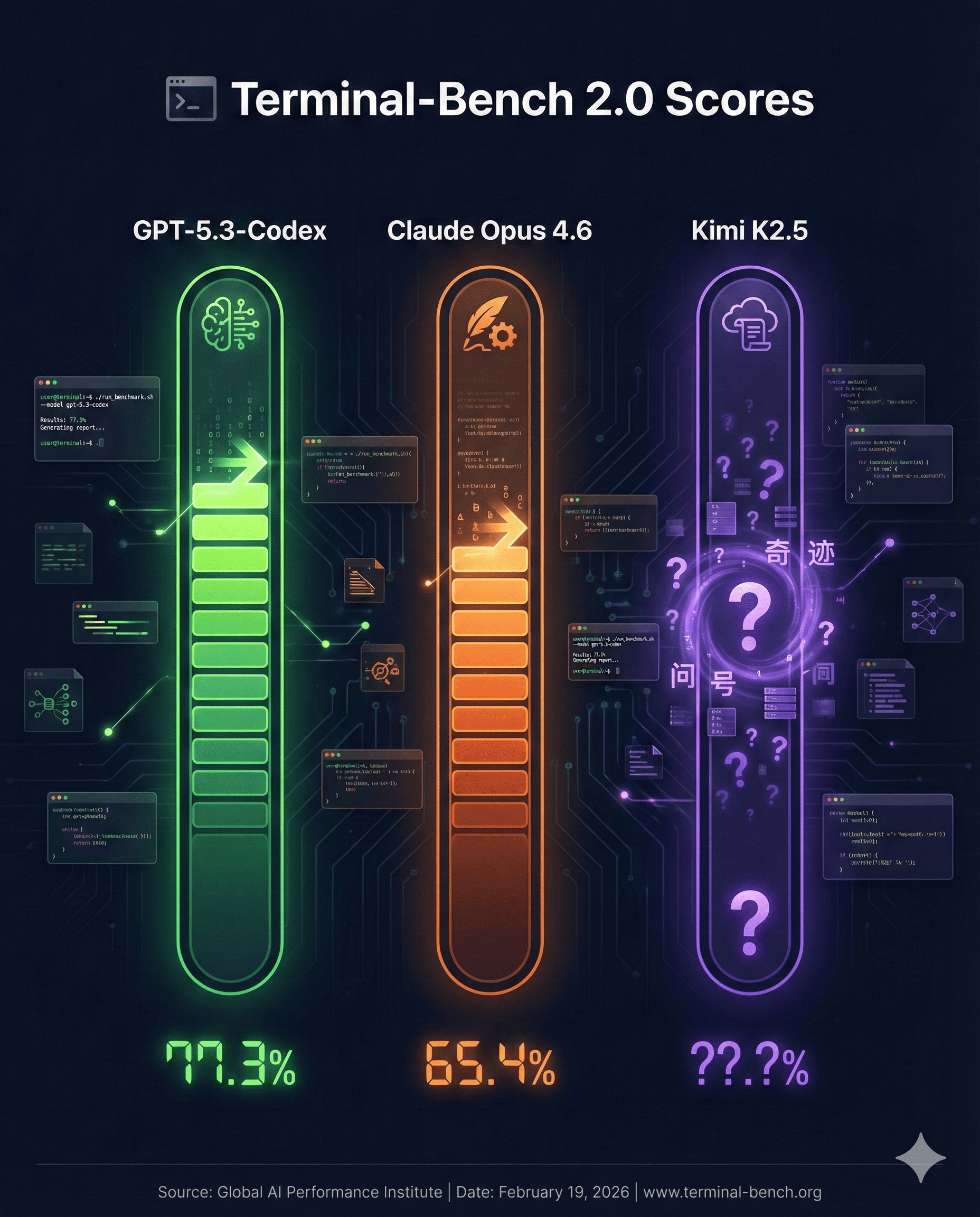

Anthropic published the Opus 4.6 blog post, API endpoints went live, and the social media blitz started rolling. For those twenty minutes, Opus 4.6 held the crown on Terminal-Bench 2.0 with a score of 65.4% — a meaningful jump from Opus 4.5's 59.8%. Developers on X were already praising the 1-million-token context window and the new Agent Teams feature.

Then OpenAI hit publish on GPT-5.3-Codex. Terminal-Bench 2.0? 77.3%. That's not a marginal difference — that's nearly 12 percentage points. One user on X described it as having "absolutely demolished" Opus 4.6 on that specific benchmark.

DataCamp's analysis confirmed the sequence: GPT-5.3-Codex dropped roughly 30 minutes after Opus 4.6, and it topped the benchmark Anthropic had just claimed to lead by over 5 percentage points.

What followed was chaotic. Developers scrambled to benchmark both models simultaneously. Tech Twitter split into camps. VentureBeat called it "the AI coding wars heating up." And the numbers from Andreessen Horowitz's enterprise survey released that same week added fuel: average enterprise LLM spending hit $7 million in 2025 — 180% higher than 2024 — and is projected to reach $11.6 million per enterprise in 2026. The coding AI market isn't theoretical anymore. It's a multi-billion-dollar fight.

Head-to-Head Benchmarks: The Numbers That Matter

I'm going to give you the actual verified numbers. Not marketing claims — benchmark scores confirmed by multiple independent sources as of February 2026.

BenchmarkGPT-5.3-CodexClaude Opus 4.6Kimi K2.5

SWE-Bench Verified N/A (not yet tested) 80.8% 76.8%

SWE-Bench Pro (Public)56.8% N/A (different benchmark) N/A

Terminal-Bench 2.0 77.3% 65.4% N/A

OSWorld-Verified 64.7% 72.7% N/A

GDPval-AA (knowledge work)~1462 Elo1606 Elo (+144 lead)N/A

ARC-AGI-2 (reasoning)~54.2% (GPT-5.2 Pro)68.8%N/A

Humanity's Last Exam50.0% (GPT-5.2 Pro)40.0%50.2%

Context window 400K tokens1M tokens (beta)256K tokens

API pricing (input/output)$10/$30 per M tokens$5/$25 per M tokens$0.60/$2.50 per M tokens

A few things jump out immediately.

SWE-Bench is essentially a dead heat at the top. Simon Willison noted just today (February 19) that the latest SWE-bench leaderboard update shows Claude Opus 4.5 at the top, followed by Gemini 3 Flash, with Opus 4.6 scoring 80.8% — and GPT-5.3-Codex isn't even represented yet because it's not available in the standard API. The top models are clustered within a single percentage point. I think we've hit a soft ceiling on this benchmark.

Terminal-Bench 2.0 is where OpenAI pulled ahead decisively. 77.3% vs 65.4% is not close. If your workflow involves heavy terminal-based agent tasks — deployment scripts, CI/CD debugging, infrastructure management — Codex has a meaningful advantage.

OSWorld tells the opposite story. Opus 4.6 scores 72.7% on agentic computer use versus Codex's 64.7%. If your use case involves GUI navigation, desktop automation, or visual-environment tasks, Opus wins.

GDPval-AA is Opus 4.6's strongest card. This benchmark measures real-world knowledge work across 44 professional occupations — finance, legal, analysis, documentation. Opus 4.6 beats GPT-5.2 by 144 Elo points. If your "coding" extends into financial modeling, legal document review, or business analysis alongside code, this advantage is substantial.

GPT-5.3-Codex: The Terminal Terminator

GPT-5.3-Codex is OpenAI's first model that combines its Codex coding stack with GPT-5.2's broader reasoning into a single system — and then runs 25% faster while using fewer tokens than its predecessor.

But the real headline is one that should make every AI researcher pause: GPT-5.3-Codex was instrumental in creating itself. Early versions debugged their own training runs, managed deployment infrastructure, diagnosed evaluation failures, and wrote scripts to dynamically scale GPU clusters during launch. OpenAI's engineering team reported being "blown away by how much Codex was able to accelerate its own development."

The New Stack called it "only a first step in having models build and improve themselves." I think that framing is correct and slightly terrifying.

Where Codex dominates:

Terminal-based agentic coding (77.3% Terminal-Bench — best in class by a wide margin)

Multi-file, multi-step task execution with long-horizon planning

Speed — 25% faster inference means lower latency for interactive pair programming

Token efficiency — fewer output tokens for equivalent results, which directly reduces API costs

Real-time steering — you can interact with Codex mid-task without losing context

Where Codex falls short:

Not yet available via standard API (rolling out in phases)

Classified as "High" cybersecurity capability under OpenAI's Preparedness Framework — which means phased access with safeguards

OSWorld (computer use) scores trail Opus 4.6 by 8 points

Enterprise knowledge work (GDPval-AA) significantly behind Opus 4.6

OpenAI also shipped GPT-5.3-Codex-Spark on February 12 — a smaller variant running on Cerebras' Wafer Scale Engine 3, delivering over 1,000 tokens per second. This signals a strategic split: Spark for real-time interactive coding, full Codex for deep multi-hour agentic work.

Claude Opus 4.6: The Enterprise Brain

If GPT-5.3-Codex is a coding specialist that got smarter, Claude Opus 4.6 is a general intelligence model that got better at coding. The distinction matters more than you'd think.

The 1 million token context window (beta) is Opus 4.6's killer feature for real-world engineering. That's roughly 750,000 words, or an entire enterprise codebase loaded into a single prompt. The previous 200K limit felt generous until you tried loading a Rails monolith into a conversation. Anthropic's MRCR v2 benchmark shows Opus 4.6 maintaining 76% accuracy at 1M tokens compared to a dismal 18.5% for GPT-5.2 at the same length. Context coherence over long sessions isn't just a nice-to-have — it's the difference between an AI that can review your whole codebase and one that forgets what file it was looking at.

Agent Teams is the other standout. This is multi-agent Claude Code orchestration — multiple sub-agents coordinating autonomously on different parts of a project. The demo that turned heads: 16 agents built a working C compiler from scratch — 100,000 lines of code that boots Linux on three CPU architectures. That's not a synthetic benchmark. That's functional software.

The ARC-AGI-2 result deserves special attention: 68.8%, nearly doubling Opus 4.5's 37.6%. This benchmark tests abstract reasoning and novel pattern recognition — the kind of fluid intelligence that has historically been AI's weakest domain. The entire field moved maybe 10 percentage points on ARC-AGI over the previous two years combined. Opus 4.6 jumped 31 points in a single generation.

Where Opus 4.6 dominates:

Enterprise knowledge work (GDPval-AA: 1606 Elo, 144 points above GPT-5.2)

Agentic computer use (OSWorld: 72.7% — best in class)

Long-context coherence (1M tokens with 76% accuracy vs competitors' collapse)

Abstract reasoning (ARC-AGI-2: 68.8%)

Financial analysis (#1 on Finance Agent benchmark)

Legal reasoning (90.2% on BigLaw Bench)

Where Opus 4.6 falls short:

Terminal-based coding is 12 points behind Codex (65.4% vs 77.3%)

SWE-bench Verified shows a tiny regression from Opus 4.5 (80.8% vs 80.9%)

Pricing is steeper than Codex ($5/$25 vs $10/$30 per million tokens)

Some users report flatter, more generic prose compared to Opus 4.5 — a real controversy in the writing community

Two days ago Anthropic also dropped Claude Sonnet 4.6 — which scores 79.6% on SWE-bench and 72.5% on OSWorld at $3/$15 per million tokens. That's near-Opus performance at 5× lower cost. If you're cost-sensitive, Sonnet 4.6 might be the actual winner here.

Suggested image alt text: Claude Opus 4.6 Agent Teams demo showing 16 sub-agents coordinating to build a C compiler from scratch Suggested filename: claude-opus-4-6-agent-teams-coding-demo-2026.jpg

Kimi K2.5: The Open-Source Wildcard

While OpenAI and Anthropic were throwing punches at each other, Moonshot AI's Kimi K2.5 quietly shipped on January 27, 2026 — and it's the one that could actually disrupt the economics of AI coding.

The architecture is massive: 1 trillion total parameters with a Mixture of Experts design that activates only 32 billion parameters per token. It supports a 256K context window, runs in four modes (Instant, Thinking, Agent, Agent Swarm), and is fully open-source under a modified MIT license. You can download the weights from Hugging Face and self-host.

The benchmark performance is genuinely strong. 76.8% on SWE-Bench Verified puts it within 4 points of Opus 4.6. On Humanity's Last Exam, Kimi K2.5 actually scores 50.2% — beating Opus 4.6's 40.0% and matching GPT-5.2 Pro. And the Codecademy guide on K2.5 reports it leads both GPT-5.2 and Claude on the agentic BrowseComp benchmark (74.9% vs 59.2% for GPT-5.2).

But the headline feature is Agent Swarm — a system where Kimi can self-direct up to 100 AI sub-agents working in parallel, each with independent tool access. Moonshot reports this reduces execution time by up to 4.5× for complex workflows compared to sequential single-agent approaches. The orchestrator agent is trained using Parallel-Agent Reinforcement Learning (PARL) that explicitly rewards early parallelism.

Where Kimi K2.5 dominates:

Price-to-performance ratio (10× cheaper than Claude, ~17× cheaper than Codex on input tokens)

Open-source flexibility — self-host, fine-tune, deploy on your own infrastructure

Parallel agent execution at scale (100 sub-agents vs Claude's 16)

Visual coding — best-in-class at turning UI mockups into functional code

Document understanding and OCR

Where Kimi K2.5 falls short:

Ecosystem maturity — 6,400 GitHub stars vs Claude Code's massive enterprise footprint

Western market presence — Moonshot AI is China-based (Alibaba-backed), with documentation and community stronger in Chinese

Agent Swarm is still a research preview, less battle-tested in production

No Terminal-Bench or OSWorld scores available for direct comparison

No native IDE extension equivalent to Codex or Claude Code (though Kimi Code CLI exists)

Here's my contrarian take: for teams spending $50K+ annually on AI coding APIs, Kimi K2.5 is the one that deserves the longest look. The 10× price advantage means you can run significantly more iterations, more agents, and more experiments for the same budget. And the open-source license means no vendor lock-in — a consideration that enterprise procurement teams are increasingly weighing.

Pricing Breakdown: Where Your Money Actually Goes

I think pricing gets hand-waved in most AI model comparisons. It shouldn't. For any team running production workloads, cost per resolved ticket or cost per shipped feature is what actually determines which model wins.

ModelInput (per M tokens) Output (per M tokens) Context windowOpen source?

GPT-5.3-Codex $10.00$ 30.00400K

Claude Opus 4.6 $5.00$25.001M (beta)

Claude Sonnet 4.6 $3.00$15.001M (beta)

Kimi K2.5 $0.60$2.50256KYes (modified MIT)

Let me make this concrete. Say you're running 1,000 coding tasks per month, each averaging 50K input tokens and 10K output tokens.

GPT-5.3-Codex: $800/month

Claude Opus 4.6: $500/month

Claude Sonnet 4.6: $300/month

Kimi K2.5: $55/month

That's a 14.5× cost difference between Codex and Kimi for the same volume. Even if Kimi needs twice the iterations to get the same result quality, you're still spending less than a quarter of what Codex costs.

But raw token pricing isn't everything. Codex uses fewer output tokens for equivalent results — OpenAI explicitly highlights this as a differentiator. And Opus 4.6's 1M context window means you can load an entire codebase once rather than chunking it across multiple API calls, which reduces total token consumption for large-project work.

The Andreessen Horowitz data adds important context: OpenAI's share of enterprise AI spending is shrinking — from 62% in 2024 to a projected 53% in 2026. Anthropic and open-source alternatives are eating into that lead. Price is a big reason why.

Which Model Should You Actually Use?

After two weeks of testing and research, here's my honest recommendation matrix. No single model wins across the board.

Choose GPT-5.3-Codex if you:

Do heavy terminal/CLI work (deployment, infrastructure, DevOps)

Need the fastest inference for interactive pair programming

Want a single model that handles coding and general professional tasks

Are already in the ChatGPT/Codex ecosystem with paid plans

Care most about multi-file coordination across long, complex sessions

Choose Claude Opus 4.6 if you:

Work with massive codebases that benefit from the 1M token window

Need AI for mixed workflows: coding + financial analysis + legal review + documentation

Want multi-agent coordination (Agent Teams) for large-scale projects

Prioritize abstract reasoning and novel problem-solving

Are building production agents that need superior computer-use capability (OSWorld lead)

Choose Kimi K2.5 if you:

Are cost-sensitive and running high-volume coding workloads

Want open-source flexibility to self-host and fine-tune

Need massive parallel execution (Agent Swarm with 100 sub-agents)

Do frontend/visual coding from mockups to functional code

Want to avoid vendor lock-in with proprietary APIs

The move I'd personally make: Start with Claude Sonnet 4.6 as your daily driver ($3/$15 per million tokens, 79.6% SWE-bench, 72.5% OSWorld). Route to Opus 4.6 for complex reasoning tasks. Use GPT-5.3-Codex specifically for terminal-heavy agentic workflows. And run Kimi K2.5 for high-volume batch operations where cost matters more than marginal quality differences.

Model routing — using different models for different tasks — is the actual winning strategy in 2026. Anyone telling you to go all-in on one model is selling you something.

The Bigger Story: What This Competition Means for Developers

The February 5 showdown wasn't just dramatic. It was informative.

The benchmark gap is narrowing. SWE-bench Verified has the top 5 models clustered within 4 percentage points. Terminal-Bench shows a bigger spread, but that's one benchmark. On most coding evaluations, we're entering diminishing returns territory — where the difference between models matters less than the difference in how you use them.

The real differentiation is shifting to ecosystem. Codex has the ChatGPT app, CLI, IDE extensions, and Cerebras-powered Spark for real-time. Opus has Claude Code, Agent Teams, 1M context, and new Excel/PowerPoint integrations via MCP. Kimi has Kimi Code CLI, Agent Swarm, and ClawHub with 5,000+ community skills. The model is becoming a commodity. The platform is where the value accrues.

Enterprise spending is exploding and diversifying simultaneously. That a16z data — $7 million average enterprise spend in 2025, projected $11.6 million in 2026 — confirms the market is real. But OpenAI's enterprise wallet share is dropping from 62% to 53%. Companies aren't consolidating on one provider. They're multi-modeling.

Self-improving AI is no longer theoretical. GPT-5.3-Codex debugging its own training process is a milestone that matters beyond benchmarks. OpenAI reports their engineering teams are "working fundamentally differently than two months ago." Whether this excites or concerns you probably depends on your relationship with job security. But either way, the genie is out.

I keep coming back to what DataCamp wrote in their analysis: being optimized for speed and autonomous creation, GPT-5.3-Codex takes a fundamentally different approach than Claude Opus 4.6. These aren't two versions of the same product anymore. They're diverging into different visions of what an AI coding tool should be. Codex is becoming a fast, autonomous agent that builds and ships. Opus is becoming a deep, thoughtful collaborator that reasons through complexity. Kimi is becoming the affordable workhorse that scales through parallelism.

The best developers in 2026 won't pick a side. They'll use all three.

FAQ

What are the exact benchmark scores for GPT-5.3-Codex vs Claude Opus 4.6?

On Terminal-Bench 2.0, GPT-5.3-Codex scores 77.3% versus Claude Opus 4.6's 65.4% — a 12-point lead. On SWE-Bench Pro (Public), Codex scores 56.8%. On OSWorld-Verified (computer use), Opus 4.6 leads with 72.7% vs Codex's 64.7%. On SWE-bench Verified, Opus 4.6 scores 80.8%. Both were released within 30 minutes of each other on February 5, 2026.

How much cheaper is Kimi K2.5 compared to GPT-5.3-Codex and Claude Opus 4.6?

Kimi K2.5's API pricing is $0.60 per million input tokens and $2.50 per million output tokens. That's approximately 17× cheaper than GPT-5.3-Codex ($10/$30) and 8× cheaper than Claude Opus 4.6 ($5/$25) on input tokens. Kimi K2.5 is also open-source under a modified MIT license, allowing free self-hosting.

Can GPT-5.3-Codex really build itself?

Yes. OpenAI confirmed that early versions of GPT-5.3-Codex were used to debug its own training runs, manage deployment infrastructure, diagnose evaluation failures, and dynamically scale GPU clusters during launch. The New Stack described it as "a first step in having models build and improve themselves." It's the first commercially released model known to have meaningfully contributed to its own development.

What is Claude Opus 4.6's Agent Teams feature?

Agent Teams enables multi-agent Claude Code orchestration where multiple sub-agents coordinate autonomously on different parts of a project via git worktrees. In a demonstration, 16 agents built a working C compiler from scratch — 100,000 lines of code that boots Linux on three CPU architectures — in approximately two weeks. It ships as a research preview.

Should I use Claude Sonnet 4.6 instead of Opus 4.6 for coding?

For most developers, yes. Claude Sonnet 4.6 scores 79.6% on SWE-bench (vs Opus's 80.8%) and 72.5% on OSWorld (vs 72.7%) at $3/$15 per million tokens — 5× cheaper than Opus. Anthropic reports 70% of users prefer Sonnet 4.6 over Sonnet 4.5, and 59% prefer it over the older Opus 4.5. Unless you need maximum reasoning depth or the highest abstract reasoning scores, Sonnet 4.6 is the better value.

What is Kimi K2.5's Agent Swarm and how is it different?

Agent Swarm allows Kimi K2.5 to coordinate up to 100 AI sub-agents working in parallel, compared to Claude's 16. It uses Parallel-Agent Reinforcement Learning (PARL) to train an orchestrator that breaks tasks into parallelizable subtasks. Moonshot reports 4.5× faster execution for complex workflows vs sequential processing. On BrowseComp (agentic search), Agent Swarm achieves 78.4% vs 60.6% for standard single-agent execution.

Which AI coding model is best for enterprise use in 2026?

It depends on your specific workflow. Claude Opus 4.6 leads enterprise knowledge work (GDPval-AA: 1606 Elo), legal reasoning (BigLaw: 90.2%), and has the largest context window (1M tokens). GPT-5.3-Codex leads terminal-based coding (Terminal-Bench: 77.3%) and runs 25% faster. Kimi K2.5 offers the best price-performance ratio at 10× lower cost. Most enterprises in 2026 are multi-modeling — using different models for different task types.

Is the coding AI benchmark race becoming meaningless?

Partially. On SWE-bench Verified, the top models are clustered within 4 percentage points —suggesting diminishing returns on this specific benchmark. However, Terminal-Bench 2.0 and OSWorld still show meaningful gaps (12+ points between leaders). The real differentiation is shifting to ecosystem, pricing, context window size, and multi-agent capabilities rather than raw benchmark scores.

Learn Generative AI in 2026: Build Real Apps with Build Fast with AI

Want to master the entire AI agent stack

GenAI Launchpad (2026 Edition) by Build Fast with AI offers:

✅ 100+ hands-on tutorials covering LLMs, agents, and AI workflows

✅ 30+ production templates including Kimi-powered applications

✅ Weekly live workshops with Satvik Paramkusham (IIT Delhi alumnus)

✅ Certificate of completion recognized across APAC

✅ Lifetime access to all updates and materials

Trusted by 12,000+ learners in India and APAC.

8-week intensive program that takes you from beginner to deploying production AI agents.

👉 Enroll in GenAI Launchpad Now

Connect with Build Fast with AI

Website: buildfastwithai.com

LinkedIn: Build Fast with AI

Instagram: @buildfastwithai