GLM-5 Is Here: Zhipu AI's 744B Open-Source Beast That's Closing the Gap With Claude and GPT-5

The open-source AI race just got a serious new contender.

Zhipu AI has officially launched GLM-5, a 744 billion parameter mixture-of-experts model (40B active) that's purpose-built for complex systems engineering and long-horizon agentic tasks. And the kicker? It's fully open-source under the MIT License, one of the most permissive licenses in common use.

GLM-5 doesn't just improve on its predecessor GLM-4.7. It goes head-to-head with Claude Opus 4.5, GPT-5.2, and Gemini 3.0 Pro across reasoning, coding, and agent benchmarks — and in several categories, it wins outright.

Here's everything you need to know.

What Is GLM-5?

GLM-5 is Zhipu AI's most powerful foundation model to date. While the earlier GLM-4.5 introduced the company's approach to reasoning, coding, and agent tasks, GLM-5 takes a deliberate step further, targeting complex systems engineering and long-horizon agentic workflows that require sustained planning, execution, and adaptation over extended periods.

Think of it this way: most AI models are good at answering questions. GLM-5 is designed to do work — the kind of multi-step, multi-tool, long-running tasks that professionals actually face in engineering, development, and business operations.

The model is available for free on Z.ai, through the developer API at api.z.ai, and as downloadable weights on both Hugging Face and ModelScope.

GLM-5 by the Numbers: Scale and Architecture

GLM-5 represents a massive scale-up from the previous generation. Here's how the architecture has evolved:

Model architecture:

Total parameters: 744 billion (up from 355B in GLM-4.5)

Active parameters: 40 billion (up from 32B in GLM-4.5)

Architecture: Mixture-of-Experts (MoE)

Pre-training data: 28.5 trillion tokens (up from 23T in GLM-4.5)

Attention mechanism: Integrates DeepSeek Sparse Attention (DSA) for reduced deployment cost while preserving long-context capacity

License: MIT (fully open-source, commercial use permitted)
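To put the list above in proportion, here is a minimal Python sketch that works out the share of parameters active per token and the generation-over-generation growth. It uses only the figures quoted in this article; the dictionary layout and function names are illustrative choices, not anything published by Zhipu AI.

```python
# Figures as quoted in this article (billions of parameters, trillions of tokens).
SPECS = {
    "GLM-4.5": {"total_b": 355, "active_b": 32, "tokens_t": 23.0},
    "GLM-5": {"total_b": 744, "active_b": 40, "tokens_t": 28.5},
}


def active_fraction(spec):
    """Share of the model's parameters that are active for each token."""
    return spec["active_b"] / spec["total_b"]


def growth(field):
    """Ratio of the GLM-5 value to the GLM-4.5 value for one spec field."""
    return SPECS["GLM-5"][field] / SPECS["GLM-4.5"][field]


for name, spec in SPECS.items():
    print(f"{name}: {active_fraction(spec):.1%} of parameters active per token")

for field, label in [("total_b", "total parameters"),
                     ("active_b", "active parameters"),
                     ("tokens_t", "pre-training tokens")]:
    print(f"{label}: {growth(field):.2f}x GLM-4.5")
```

The takeaway is that total parameters roughly doubled while active parameters grew by only a quarter, so the per-token compute footprint rises far more slowly than the model's overall capacity.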

Training infrastructure breakthrough:

One of the most significant technical contributions behind GLM-5 is Slime — a novel asynchronous reinforcement learning infrastructure developed by Zhipu AI. Traditional RL training for large language models is notoriously inefficient at scale. Slime substantially improves training throughput and efficiency, enabling more fine-grained post-training iterations.

This matters because reinforcement learning is what bridges the gap between a model that's competent (from pre-training) and one that's excellent (from post-training alignment and optimization). By making RL more efficient, Zhipu could iterate faster and push GLM-5's capabilities further than brute-force scaling alone would allow.
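To make "asynchronous" concrete, the toy sketch below decouples rollout generation from training with a shared queue, so the trainer updates as soon as a batch is ready instead of waiting for every worker to finish. This is not Slime's actual design, which the article does not describe; the worker, trainer, and reward stand-ins are all hypothetical and exist only to illustrate the general pattern.

```python
import asyncio
import random


async def rollout_worker(worker_id, queue, n_rollouts, rng):
    """Hypothetical rollout generator: emits trajectories at its own pace."""
    for step in range(n_rollouts):
        # Stand-in for variable-length generation time.
        await asyncio.sleep(rng.uniform(0.001, 0.005))
        await queue.put({"worker": worker_id, "step": step, "reward": rng.random()})


async def trainer(queue, batch_size, total_rollouts):
    """Hypothetical trainer: runs an update whenever a full batch has arrived."""
    updates, batch = [], []
    for _ in range(total_rollouts):
        batch.append(await queue.get())
        if len(batch) == batch_size:
            # Stand-in for a gradient step: record the mean reward of the batch.
            updates.append(sum(r["reward"] for r in batch) / batch_size)
            batch = []
    return updates


async def run(n_workers=4, n_rollouts=8, batch_size=4, seed=0):
    rng = random.Random(seed)
    queue = asyncio.Queue(maxsize=16)  # bounded buffer between the two sides
    workers = [
        asyncio.create_task(rollout_worker(w, queue, n_rollouts, rng))
        for w in range(n_workers)
    ]
    updates = await trainer(queue, batch_size, n_workers * n_rollouts)
    await asyncio.gather(*workers)
    return updates


if __name__ == "__main__":
    print(f"training updates performed: {len(asyncio.run(run()))}")
```

In a synchronous setup the trainer would sit idle until the slowest rollout finished; here slow and fast workers simply interleave in the queue, which is the intuition behind the throughput gains claimed for asynchronous RL.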

Benchmark Results: How GLM-5 Stacks Up

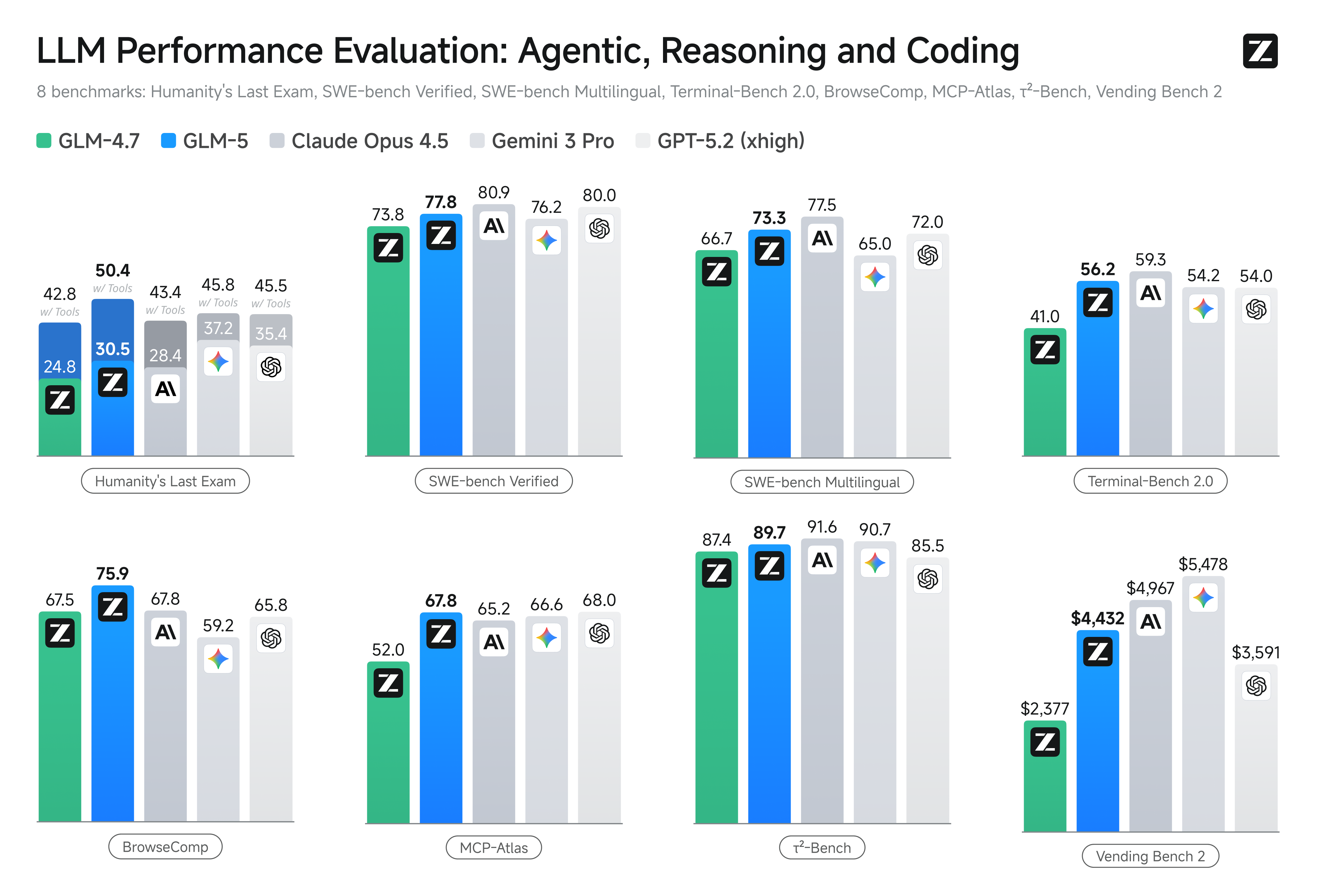

Here's where things get interesting. GLM-5 doesn't just beat other open-source models — it competes directly with the best proprietary models from OpenAI, Anthropic, and Google.

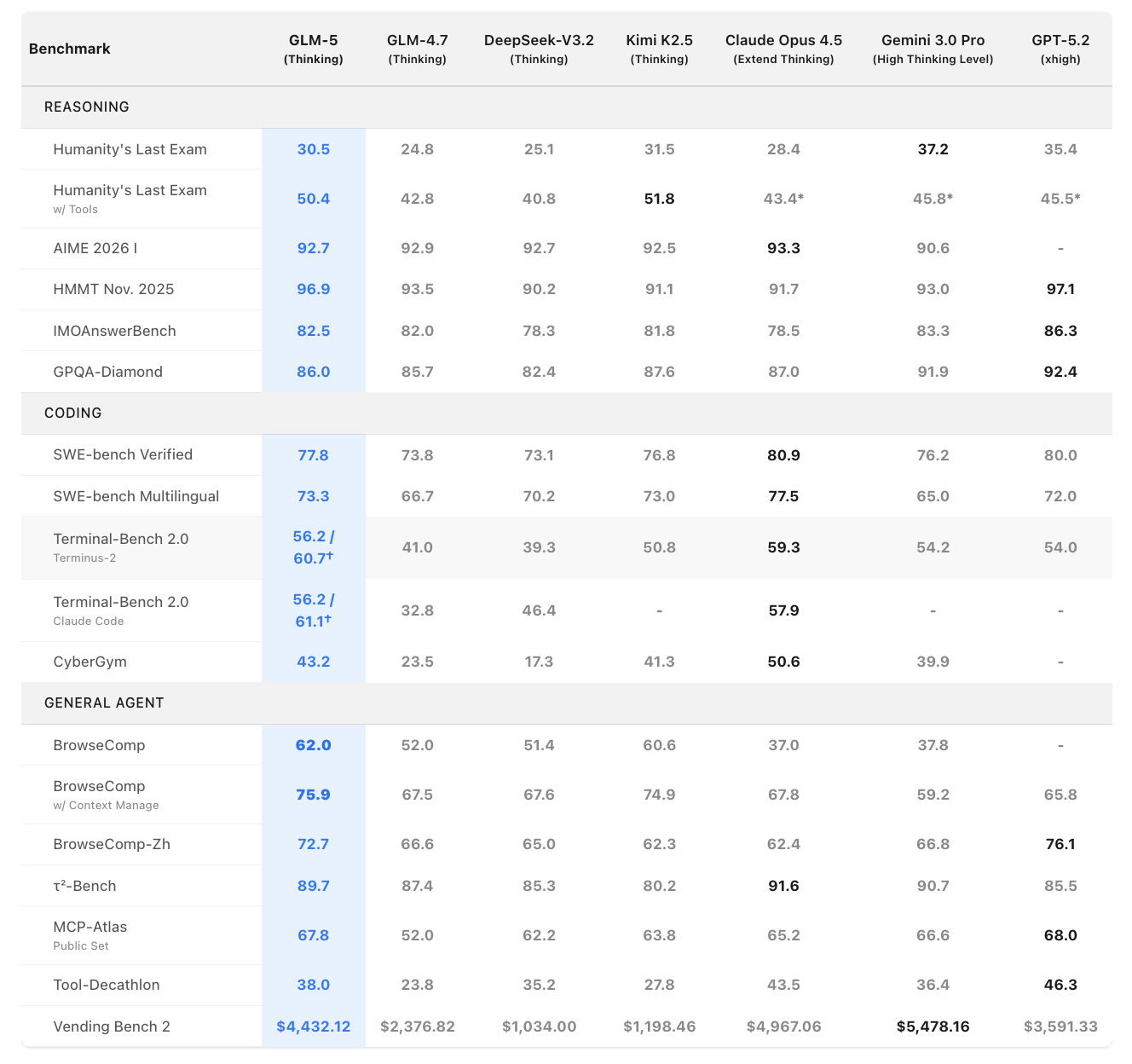

Reasoning Performance

Humanity's Last Exam: GLM-5 scores 30.5 without tools and 50.4 with tools. The tool-augmented score beats both Claude Opus 4.5 (28.4 / 43.4) and GPT-5.2 (35.4 / 45.5), though GPT-5.2 still leads without tools

AIME 2026 I: Scores 92.7, essentially matching Claude Opus 4.5 (93.3) and beating Gemini 3.0 Pro (90.6)

HMMT Nov. 2025: Scores 96.9, just behind GPT-5.2 (97.1), the only model cited ahead of it

GPQA-Diamond: Scores 86.0, competitive with Claude Opus 4.5 (87.0) though below Gemini 3.0 Pro (91.9)

Coding Performance

SWE-bench Verified: GLM-5 hits 77.8%, making it the #1 open-source model on this benchmark, though Claude Opus 4.5 (80.9%) and GPT-5.2 (80.0%) still lead among proprietary models

SWE-bench Multilingual: Scores 73.3%, again #1 in open-source, trailing only Claude Opus 4.5 (77.5%)

Terminal-Bench 2.0: Scores 56.2 (verified: 60.7), approaching Claude Opus 4.5's 59.3 and beating GPT-5.2's 54.0

CyberGym: Scores 43.2, a massive jump from GLM-4.7's 23.5, though still behind Claude Opus 4.5 (50.6)

General Agent Performance

BrowseComp: GLM-5 scores 62.0, dramatically ahead of Claude Opus 4.5 (37.0) and Gemini 3.0 Pro (37.8)

BrowseComp with Context Management: Hits 75.9, the highest among all models including GPT-5.2 (65.8)

τ²-Bench: Scores 89.7, strong but trailing Claude Opus 4.5 (91.6) and Gemini 3.0 Pro (90.7)

Vending Bench 2: Finishes with $4,432 — #1 among open-source models and approaching Claude Opus 4.5's $4,967
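With this many numbers, a quick tally helps. The sketch below takes only the GLM-5 and Claude Opus 4.5 scores quoted above (benchmarks with no Claude figure in this article are left out) and counts where each model leads. The data structure and function name are illustrative.

```python
# (GLM-5, Claude Opus 4.5) pairs as quoted in this article; higher is better.
SCORES = {
    "Humanity's Last Exam": (30.5, 28.4),
    "Humanity's Last Exam (tools)": (50.4, 43.4),
    "AIME 2026 I": (92.7, 93.3),
    "GPQA-Diamond": (86.0, 87.0),
    "SWE-bench Verified": (77.8, 80.9),
    "SWE-bench Multilingual": (73.3, 77.5),
    "Terminal-Bench 2.0": (56.2, 59.3),
    "CyberGym": (43.2, 50.6),
    "BrowseComp": (62.0, 37.0),
    "τ²-Bench": (89.7, 91.6),
    "Vending Bench 2 ($)": (4432.0, 4967.0),
}


def head_to_head(scores):
    """Split benchmark names into those GLM-5 leads and those Claude leads."""
    glm_leads = [name for name, (glm, claude) in scores.items() if glm > claude]
    claude_leads = [name for name, (glm, claude) in scores.items() if glm < claude]
    return glm_leads, claude_leads


glm_leads, claude_leads = head_to_head(SCORES)
print(f"GLM-5 leads on {len(glm_leads)} of {len(SCORES)} benchmarks")
for name, (glm, claude) in SCORES.items():
    print(f"{name:32s} GLM-5 {glm:>8.1f}  Claude {claude:>8.1f}  gap {glm - claude:+.1f}")
```

The pattern is consistent with the article's framing: GLM-5's outright wins cluster in tool-augmented reasoning and web browsing, while Claude Opus 4.5 keeps a modest lead on most coding benchmarks.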

Agentic Capabilities: Where GLM-5 Really Shines

The most compelling story about GLM-5 isn't any single benchmark — it's the agentic capability gap it closes.

Vending Bench 2: A Year of AI Business Management

Vending Bench 2 is one of the most interesting benchmarks in AI right now. It doesn't test whether a model can answer trivia or write code snippets. Instead, it asks the model to run a simulated vending machine business over an entire year — making purchasing decisions, managing inventory, handling pricing, and optimizing for profit over a long time horizon.

GLM-5 finishes with a final account balance of $4,432.12, compared to:

Claude Opus 4.5: $4,967.06

Gemini 3.0 Pro: $5,478.16

GPT-5.2: $3,591.33

DeepSeek-V3.2: $1,034.00

Kimi K2.5: $1,198.46

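GLM-5 finishes ahead of GPT-5.2, within about 11% of Claude Opus 4.5, and with roughly four times the balance of the other open models listed. For an open-source model to come this close to the leading proprietary systems on a long-horizon planning task is a notable result.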
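The relative standings are easier to see as ratios. This short sketch ranks the final balances quoted above and expresses GLM-5's result as a percentage of each other model's; the variable and function names are illustrative.

```python
# Final Vending Bench 2 balances in USD, as quoted in this article.
BALANCES = {
    "GLM-5": 4432.12,
    "Claude Opus 4.5": 4967.06,
    "Gemini 3.0 Pro": 5478.16,
    "GPT-5.2": 3591.33,
    "DeepSeek-V3.2": 1034.00,
    "Kimi K2.5": 1198.46,
}


def ranking(balances):
    """Model names ordered from highest to lowest final balance."""
    return sorted(balances, key=balances.get, reverse=True)


def relative_to(balances, model, other):
    """Balance of `model` as a fraction of the balance of `other`."""
    return balances[model] / balances[other]


order = ranking(BALANCES)
print("Ranking:", " > ".join(order))
for other in order:
    if other != "GLM-5":
        pct = relative_to(BALANCES, "GLM-5", other)
        print(f"GLM-5 balance is {pct:.0%} of {other}'s")
```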

BrowseComp: Web Browsing Dominance

On BrowseComp, which tests a model's ability to navigate the web, find information, and synthesize results, GLM-5 scores 62.0, about 1.7 times Claude Opus 4.5's score of 37.0. With context management enabled, it hits 75.9, the highest of any model tested.

This suggests GLM-5 has particularly strong capabilities in real-world information retrieval and multi-step web tasks — exactly the kind of thing that matters when you're building AI agents that need to operate autonomously.

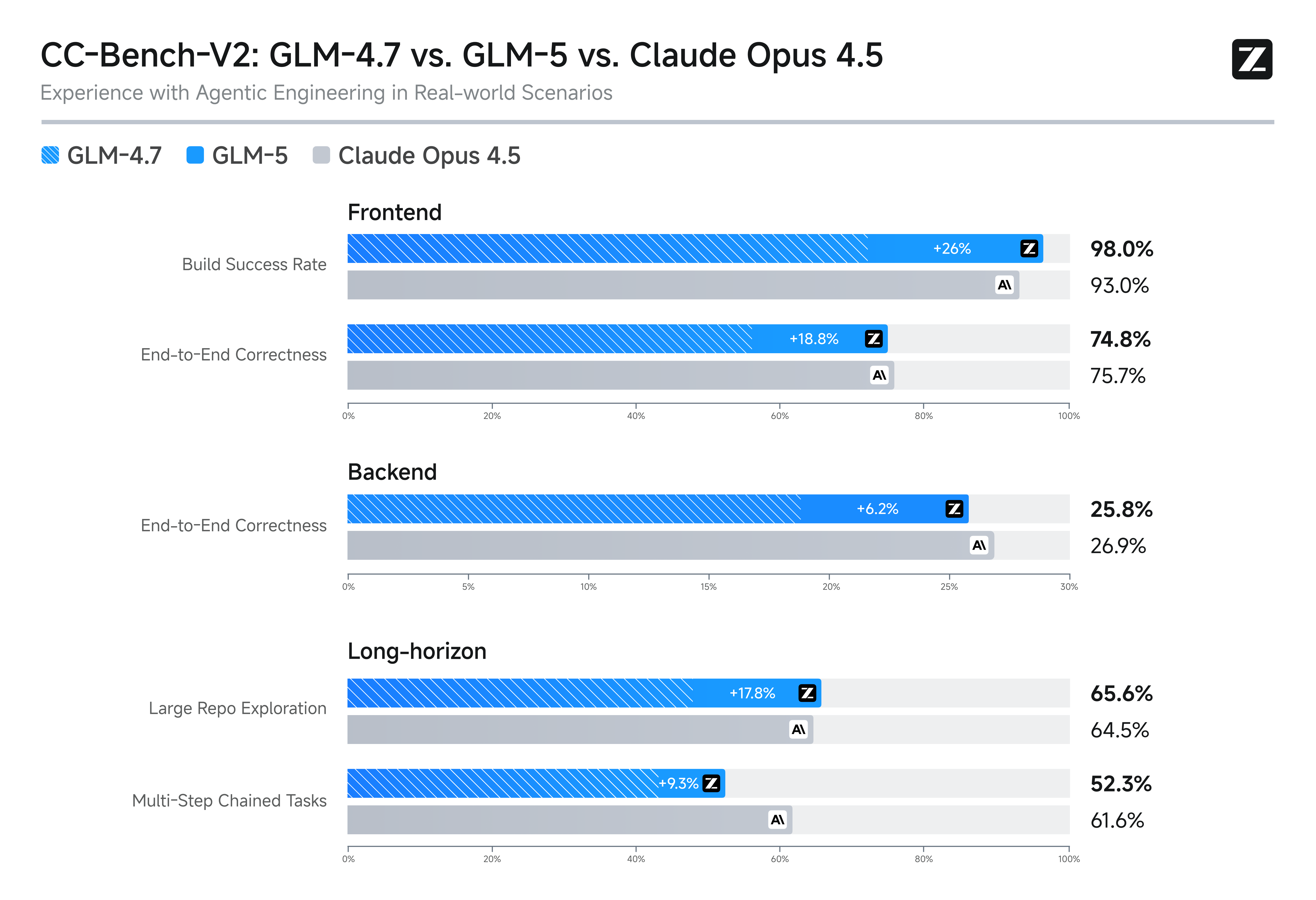

CC-Bench-V2: Systems Engineering

On Zhipu's internal evaluation suite CC-Bench-V2, GLM-5 significantly outperforms GLM-4.7 across frontend, backend, and long-horizon tasks, narrowing the gap to Claude Opus 4.5. This benchmark specifically measures complex software engineering — not isolated coding puzzles, but real systems work involving multiple files, dependencies, and architectural decisions.

From Chat to Work: GLM-5's Office Integration

Here's an angle that doesn't get enough attention: Zhipu AI is explicitly positioning GLM-5 as a productivity model, not just a chat model.

The company's official application, Z.ai, is rolling out an Agent mode with built-in skills for creating professional documents. GLM-5 can turn text or source materials directly into:

.docx files — PRDs, lesson plans, reports, run sheets

.pdf files — Formatted, ready-to-share documents

.xlsx files — Spreadsheets, financial reports, data analysis

This isn't just "generate text and paste it into Word." GLM-5 produces end-to-end, ready-to-use documents with proper formatting, layouts, and structure. It supports multi-turn collaboration, meaning you can refine outputs iteratively rather than starting from scratch each time.

Zhipu frames this as the natural evolution of foundation models: moving from "chat" to "work" — much like how Office tools serve knowledge workers and programming tools serve engineers.

Open-Source Under MIT License: Why That Matters

GLM-5 is released under the MIT License, one of the most permissive widely used open-source licenses available. Here's why that's a big deal:

What MIT License means for you:

Full commercial use — build products, sell services, no restrictions

Modify freely — fine-tune, distill, adapt for your specific use case

No copyleft obligations — you don't have to open-source your own code

Minimal legal overhead — just include the copyright notice

Compare this to models released under more restrictive licenses (like Meta's Llama license or some "open-weight" models with commercial use limitations), and the value proposition becomes clear. GLM-5 gives enterprises and developers maximum flexibility to build on top of a genuinely frontier-class model.

Where to access the weights:

Hugging Face: Full model weights available for download

ModelScope: Alternative download for developers in China and Asia

API access: api.z.ai and BigModel.cn

Compatibility: Works with Claude Code and OpenClaw

How to Try GLM-5 Right Now

Zhipu AI has made GLM-5 accessible through multiple channels:

Free web access: Z.ai — try the model immediately in your browser, no API key needed

Developer API: api.z.ai — integrate GLM-5 into your applications with standard API calls

Download weights: Available on Hugging Face and ModelScope under MIT License

Tool compatibility: Works with Claude Code and OpenClaw for agentic coding workflows
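For the developer API route, a request might look something like the sketch below. Treat every specific here as an assumption: the article only names the host api.z.ai, so the endpoint path, the OpenAI-style chat payload, the "glm-5" model id, and the ZAI_API_KEY variable name are placeholders to be checked against the official API documentation. The script only builds the request; sending it is left as an explicit, separate step.

```python
import json
import os
import urllib.request

# ASSUMPTIONS, not confirmed by this article: endpoint path, model id,
# and payload shape. Verify all three in the official API docs before use.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"
MODEL_ID = "glm-5"


def build_request(prompt, api_key):
    """Build (but do not send) a chat-style POST request for one user prompt."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send a prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # ZAI_API_KEY is a hypothetical environment variable name.
    key = os.environ.get("ZAI_API_KEY", "missing-key")
    req = build_request("Summarize the MIT License in one sentence.", key)
    print(req.get_method(), req.full_url)
    # To actually call the API once the details are confirmed: send(req)
```

Keeping request construction separate from sending makes it easy to inspect or log the payload first, which is useful while the endpoint details are still unverified.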

The Big Picture: What GLM-5 Means for the AI Industry

GLM-5's release marks a significant moment in the open-source AI landscape for three reasons.

First, the performance gap is narrowing fast. An open-source model scoring within striking distance of Claude Opus 4.5 and GPT-5.2 on coding and agentic benchmarks would have been unthinkable a year ago. GLM-5 doesn't beat the proprietary frontier everywhere, but it's close enough that the calculus is changing for enterprises weighing build-vs-buy decisions.

Second, the focus on agentic capabilities signals where the industry is heading. GLM-5 isn't optimized for chatbot conversations or creative writing. It's optimized for doing work — running businesses, engineering software systems, managing long-horizon tasks. This is the model you'd pick to build an AI employee, not an AI assistant.

Third, the MIT License sets a new standard for open-source AI. As one of the most permissive licenses attached to a model of this scale and capability, it removes most legal barriers to adoption. Enterprises that were hesitant about legal risk with other "open" models now have a far less restricted option at the frontier.

The message from Zhipu AI is clear: the era of open-source models being a generation behind proprietary ones is ending. And that changes everything.

Frequently Asked Questions

What is GLM-5?

GLM-5 is Zhipu AI's latest and most powerful foundation model, featuring 744 billion total parameters with 40 billion active parameters in a mixture-of-experts architecture. It is designed specifically for complex systems engineering and long-horizon agentic tasks, and it is fully open-source under the MIT License.

How does GLM-5 compare to Claude Opus 4.5?

GLM-5 approaches Claude Opus 4.5 across most benchmarks. It outperforms Claude Opus 4.5 on BrowseComp (62.0 vs 37.0) and Humanity's Last Exam with tools (50.4 vs 43.4), while Claude leads on SWE-bench Verified (80.9 vs 77.8) and Vending Bench 2 ($4,967 vs $4,432). GLM-5 is the strongest open-source competitor to Claude Opus 4.5.

How does GLM-5 compare to GPT-5.2?

GLM-5 beats GPT-5.2 on several benchmarks including BrowseComp with context management (75.9 vs 65.8), Vending Bench 2 ($4,432 vs $3,591), and Terminal-Bench 2.0 (56.2 vs 54.0). GPT-5.2 leads on GPQA-Diamond (92.4 vs 86.0) and SWE-bench Verified (80.0 vs 77.8).

Is GLM-5 open-source?

Yes. GLM-5 is fully open-source under the MIT License, one of the most permissive widely used open-source licenses. It allows full commercial use, modification, and redistribution with no copyleft obligations. Model weights are available on Hugging Face and ModelScope.

What is Vending Bench 2 and why does it matter?

Vending Bench 2 is a benchmark that measures an AI model's long-term operational capability by requiring it to run a simulated vending machine business over a full year. GLM-5 achieves a final balance of $4,432, ranking #1 among all open-source models and approaching Claude Opus 4.5's $4,967.

How can I try GLM-5 for free?

You can try GLM-5 for free at Z.ai in your browser with no API key required. Developers can access it through the API at api.z.ai, and model weights are available for download on Hugging Face and ModelScope.

What is the Slime RL infrastructure?

Slime is a novel asynchronous reinforcement learning infrastructure developed by Zhipu AI and used in GLM-5's post-training. It substantially improves RL training throughput and efficiency compared to traditional approaches, enabling more fine-grained post-training iterations that helped push GLM-5's capabilities beyond what pre-training scale alone could achieve.

What document types can GLM-5 create?

Through the Z.ai Agent mode, GLM-5 can generate end-to-end professional documents including Word files (.docx), PDFs, and Excel spreadsheets (.xlsx). It supports creating PRDs, lesson plans, exams, financial reports, menus, run sheets, and more — with proper formatting delivered as ready-to-use files.

