The best time to start with AI was yesterday. The second best time? Right after reading this post. The fastest way? Gen AI Launch Pad’s 6-week transformation.

Join Build Fast with AI’s Gen AI Launch Pad 2025 - your accelerated path to mastering AI tools and building revolutionary applications.

Introduction

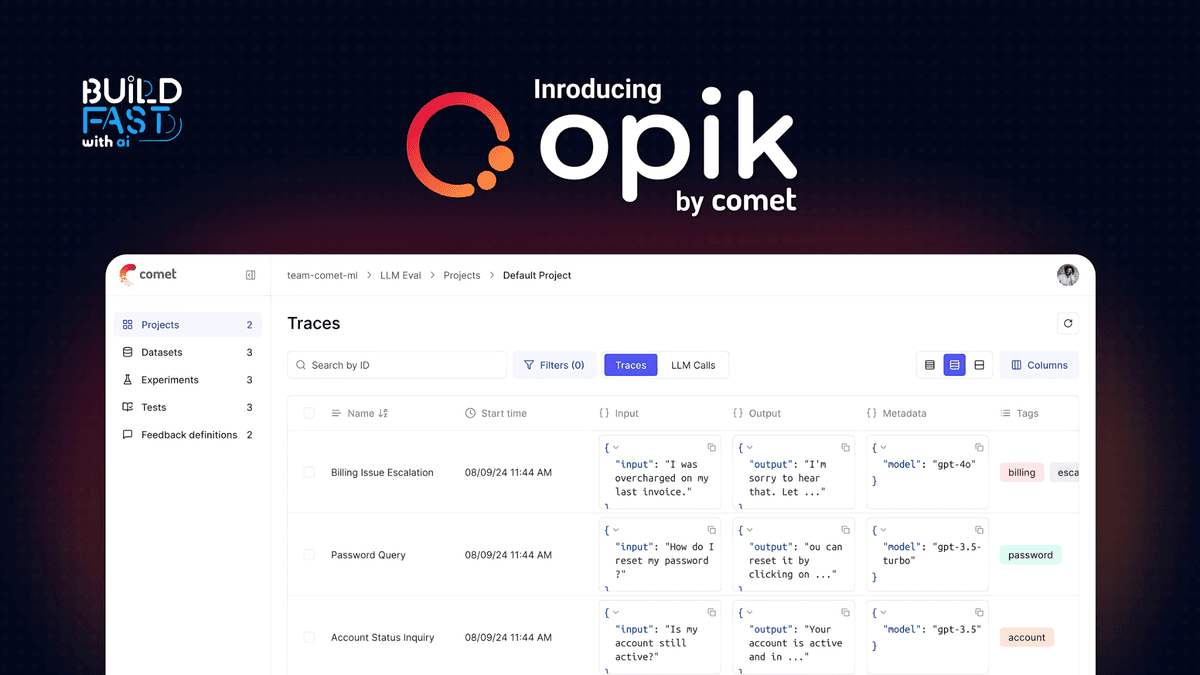

In the era of AI-driven innovation, Large Language Models (LLMs) have become essential tools for diverse applications, ranging from content generation to complex decision-making. However, ensuring the reliability, performance, and accuracy of LLMs in production environments remains a challenge. Enter Opik: an open-source platform developed by Comet to evaluate, test, and monitor LLM applications effectively.

In this blog post, we will explore how to use Opik for tracing, evaluation, and production monitoring of LLM applications. By the end, you'll understand its core features, how to set it up, and how to log traces and score outputs with its evaluation metrics, so you can turn the results into actionable improvements to your LLM workflows.

Key Features of Opik

- Tracing: Tracks all LLM calls during development and production, enabling better debugging and analysis.

- Evaluation: Automates the evaluation process using datasets and experiments, providing insights into model performance.

- Production Monitoring: Logs production traces and tracks key metrics like feedback scores, trace counts, and token usage over time.
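The monitoring bullet can be made concrete with a small example. The sketch below is plain Python, not Opik code: it rolls logged traces up into the per-day trace count, token usage, and average feedback score that a monitoring view plots over time. The `TraceRecord` fields and the `daily_summary` helper are hypothetical; Opik tracks these metrics for you once traces are logged.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class TraceRecord:
    # Hypothetical shape of one logged production trace (not Opik's schema)
    day: date
    total_tokens: int
    feedback_score: Optional[float] = None  # e.g. a user rating in [0, 1], if given


def daily_summary(traces):
    """Group traces by day; report count, token usage, and mean feedback score."""
    by_day = defaultdict(list)
    for trace in traces:
        by_day[trace.day].append(trace)

    summary = {}
    for day in sorted(by_day):
        rows = by_day[day]
        # Only traces that actually received feedback count toward the average
        scores = [r.feedback_score for r in rows if r.feedback_score is not None]
        summary[day] = {
            "trace_count": len(rows),
            "total_tokens": sum(r.total_tokens for r in rows),
            "mean_feedback": mean(scores) if scores else None,
        }
    return summary


traces = [
    TraceRecord(date(2025, 1, 1), total_tokens=120, feedback_score=1.0),
    TraceRecord(date(2025, 1, 1), total_tokens=80, feedback_score=0.0),
    TraceRecord(date(2025, 1, 2), total_tokens=200),
]
for day, stats in daily_summary(traces).items():
    print(day, stats)
# 2025-01-01 {'trace_count': 2, 'total_tokens': 200, 'mean_feedback': 0.5}
# 2025-01-02 {'trace_count': 1, 'total_tokens': 200, 'mean_feedback': None}
```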

1. Setup and Installation

Getting started with Opik is straightforward. With just a few commands, you can have the platform ready to enhance your LLM workflows.

Code:

%pip install --upgrade opik openai

import opik

opik.configure(use_local=False)

Explanation:

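- The first line installs the latest versions of the opik and openai libraries (or upgrades existing installs).
- opik.configure(use_local=False) points the Opik SDK at the hosted Opik platform from Comet rather than a self-hosted local instance. If you have not configured Opik before, it prompts for your API key and workspace.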

Application:

Run this setup in a notebook environment such as Jupyter Notebook or Google Colab to get started with Opik. %pip is a notebook magic; from a terminal, run pip install --upgrade opik openai instead.

2. Preparing the Environment

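The examples in this post call OpenAI models, and Opik's LLM-as-a-judge metrics (such as Hallucination, used later) call an OpenAI model by default, so you need an OpenAI API key. The following script sets it up without hard-coding it in your notebook.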

Code:

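import getpass
import os

# Prefer a key that is already set in the environment
api_key = os.environ.get("OPENAI_API_KEY")

if not api_key:
    try:
        # Google Colab only: read the key from the notebook's Secrets panel
        from google.colab import userdata
        api_key = userdata.get("OPENAI_API_KEY")
    except Exception:
        # Not running in Colab, or the secret is missing or access was not granted
        api_key = None

if not api_key:
    # Last resort: prompt for the key without echoing it to the screen
    api_key = getpass.getpass("Enter your OpenAI API key: ")

os.environ["OPENAI_API_KEY"] = api_key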

Explanation:

- userdata.get("OPENAI_API_KEY") retrieves the API key stored in Google Colab's Secrets panel.
- If the key cannot be retrieved that way, getpass prompts you to enter it manually, without echoing it to the screen.
- os.environ stores the key as an environment variable, where the OpenAI client reads it automatically.

Application:

Use this pattern whenever your code calls OpenAI's API: it keeps the key out of your notebook's source code, so it is not exposed when you share the notebook.

3. Logging Traces

Opik’s tracing functionality records all interactions with the LLM, making it easier to analyze and debug outputs. Let’s explore how to enable and utilize this feature.

Code:

import os

from openai import OpenAI
from opik.integrations.openai import track_openai

# Log all traces from this notebook to a named Opik project
os.environ["OPIK_PROJECT_NAME"] = "openai-integration-demo"

client = OpenAI()
# Wrap the client: every chat.completions.create call is now logged as a trace
openai_client = track_openai(client)

prompt = """
Write a short two-sentence story about Opik.
"""

completion = openai_client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": prompt}],
)

print(completion.choices[0].message.content)

Example Output (model responses vary from run to run):

Opik was a mischievous kitten who loved to explore and play in the garden all day long. One day, he got his paws on a bird feather and proudly brought it to his humans as a gift.

Explanation:

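- track_openai(client) wraps the OpenAI client so that each chat.completions.create call is logged to Opik as a trace, including the input messages, the model's response, and token usage.
- prompt and completion represent a typical single-turn interaction with an LLM.
- All traces are logged under the project name "openai-integration-demo" (set through the OPIK_PROJECT_NAME environment variable) for later analysis.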

Application:

Use this feature to debug, track, and analyze how your LLM responds to various inputs. Developers can use trace logs to understand input-output relationships and fine-tune their models.
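Conceptually, wrapping a client for tracing works like a proxy: the wrapper forwards each call to the real client and records the input, output, and timing on the way back. The sketch below illustrates that idea with a hypothetical `TracedClient` class and a fake model function so it runs offline; it is not how `track_openai` is implemented, and a real tracer would also capture errors, token usage, and model parameters.

```python
import time


class TracedClient:
    """Hypothetical tracing wrapper: forwards calls and records each one."""

    def __init__(self, complete_fn):
        self._complete_fn = complete_fn  # the underlying "LLM call"
        self.traces = []  # one dict per call: input, output, latency

    def complete(self, prompt):
        start = time.perf_counter()
        output = self._complete_fn(prompt)  # forward to the wrapped function
        self.traces.append(
            {
                "input": prompt,
                "output": output,
                "latency_s": time.perf_counter() - start,
            }
        )
        return output  # the caller gets the same result as without the wrapper


def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline
    return prompt.upper()


traced = TracedClient(fake_llm)
traced.complete("hello opik")
print(traced.traces[0]["input"], "->", traced.traces[0]["output"])
# hello opik -> HELLO OPIK
```

The key design point is that calling code does not change: it uses the wrapped object exactly as it would use the original, and tracing happens as a side effect.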

4. Enhancing Functionality with Decorators

The @track decorator simplifies logging for functions interacting with LLMs, automating trace capture for function calls and outputs.

Code:

import os

from openai import OpenAI
from opik import track
from opik.integrations.openai import track_openai

os.environ["OPIK_PROJECT_NAME"] = "openai-integration-demo"

client = OpenAI()
openai_client = track_openai(client)


@track
def generate_story(prompt):
    # This OpenAI call is logged as a nested span inside the current trace
    res = openai_client.chat.completions.create(
        model="gpt-4o", messages=[{"role": "user", "content": prompt}]
    )
    return res.choices[0].message.content


@track
def generate_topic():
    prompt = "Generate a topic for a story about Opik."
    res = openai_client.chat.completions.create(
        model="gpt-4o", messages=[{"role": "user", "content": prompt}]
    )
    return res.choices[0].message.content


@track
def generate_opik_story():
    # Outermost tracked call: becomes the root of the trace
    topic = generate_topic()
    story = generate_story(topic)
    return story


print(generate_opik_story())

Example Output (the story text will vary from run to run):

OPIK: Started logging traces to the "openai-integration-demo" project.

Opik was a young boy with a wild imagination and a thirst for adventure. He loved to roam the forests...

Explanation:

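- The @track decorator logs each call to the decorated function, automatically capturing its inputs, outputs, and execution details.
- Because generate_opik_story calls generate_topic and generate_story, which are also decorated, Opik records them as nested spans inside a single trace, together with the OpenAI calls made through the wrapped client.
- Splitting the pipeline into small functions like generate_story and generate_topic keeps the code reusable and makes each step visible in the trace.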

Application:

Ideal for modular applications where multiple functions interact with LLMs. Use this to maintain clean, traceable code that is easy to debug and extend.

5. Evaluating LLM Outputs

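Opik provides built-in metrics to assess the quality of LLM responses, ranging from simple heuristic checks to LLM-as-a-judge metrics that use a model to grade outputs. Here are some examples: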

Example Metric: Hallucination

from opik.evaluation.metrics import Hallucination

# LLM-as-a-judge metric: scoring calls a judge model, so an API key is required
metric = Hallucination()

score = metric.score(
    input="What is the capital of France?",
    output="Paris",
    context=["France is a country in Europe."],
)

print(score)

Example Output (the reason text is written by the judge model and will vary):

ScoreResult(name='hallucination_metric', value=0.0, reason="['The OUTPUT is a well-established fact and aligns with general knowledge...', 'Paris is the known capital of France...']")

Explanation:

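- The Hallucination metric uses an LLM as a judge to check whether the output introduces information that is inaccurate or fabricated.
- A value of 0.0 means the judge found no hallucination; a value of 1.0 flags the output as hallucinated.
- Here, the context does not mention Paris at all, but the judge accepted "Paris" because it is consistent with well-established general knowledge, as its reason text states, so the score is 0.0.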

Application:

Use this metric to ensure the factual correctness of LLM-generated content, especially in high-stakes domains like healthcare or legal advice.
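The Hallucination metric follows the LLM-as-a-judge pattern: the input, output, and context are placed into a grading prompt, a judge model returns a score and a reason, and the metric parses that reply. The sketch below shows the pattern with a hypothetical prompt and a stub judge so it runs without an API call; the prompt wording, the JSON reply format, and the `hallucination_score` helper are assumptions, not Opik's internals.

```python
import json


def build_judge_prompt(question, answer, context):
    # Hypothetical grading prompt; Opik's real Hallucination prompt differs
    context_text = "\n".join(f"- {c}" for c in context)
    return (
        "You are checking an answer for hallucinations.\n"
        f"QUESTION: {question}\nANSWER: {answer}\nCONTEXT:\n{context_text}\n"
        'Reply with JSON: {"score": 0 or 1, "reason": "..."} '
        "where 1 means the answer contains unsupported or false claims."
    )


def hallucination_score(question, answer, context, judge):
    """Ask a judge (any callable: prompt -> str) and parse its JSON verdict."""
    raw = judge(build_judge_prompt(question, answer, context))
    verdict = json.loads(raw)
    score = float(verdict["score"])
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"judge returned an out-of-range score: {score}")
    return score, verdict["reason"]


def stub_judge(prompt):
    # Stand-in for a real LLM call so the sketch runs offline
    return '{"score": 0, "reason": "Paris is the capital of France."}'


print(hallucination_score("What is the capital of France?", "Paris",
                          ["France is a country in Europe."], stub_judge))
# (0.0, 'Paris is the capital of France.')
```

In practice the judge's reply is the fragile part, which is why the sketch validates the parsed score instead of trusting it.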

Additional Metrics:

- Answer Relevance: Assesses how well the LLM’s response aligns with the input query.

- Context Precision: Evaluates the relevance and conciseness of the output concerning the provided context.
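The Key Features list describes evaluation as running experiments over datasets. The plain-Python sketch below shows the loop such an experiment automates: run a task on every dataset item, score each output with one or more metrics, and average the scores. The `run_experiment` and `exact_match` helpers and the dataset schema are hypothetical; in Opik, the task would be your LLM pipeline and the metrics would be ones like those above, which take their own arguments (for example `input`, `output`, and `context`).

```python
from statistics import mean


def run_experiment(dataset, task, metrics):
    """Run `task` on each item and score its output with every metric.

    dataset: list of dicts with "input" and "expected_output" (hypothetical schema)
    task:    callable(input) -> output, e.g. your LLM pipeline
    metrics: dict of name -> callable(output, expected_output) -> float
    """
    rows = []
    for item in dataset:
        output = task(item["input"])
        scores = {name: fn(output, item["expected_output"])
                  for name, fn in metrics.items()}
        rows.append({"input": item["input"], "output": output, "scores": scores})
    # Average each metric across the dataset for an experiment-level summary
    summary = {name: mean(r["scores"][name] for r in rows) for name in metrics}
    return rows, summary


def exact_match(output, expected):
    # Toy heuristic metric: 1.0 if the strings match, ignoring case and whitespace
    return float(output.strip().lower() == expected.strip().lower())


dataset = [
    {"input": "What is the capital of France?", "expected_output": "Paris"},
    {"input": "What is the capital of Japan?", "expected_output": "Tokyo"},
]
# A fake "model" that gets one answer wrong, so the sketch runs offline
answers = {"What is the capital of France?": "Paris",
           "What is the capital of Japan?": "Kyoto"}
rows, summary = run_experiment(dataset, answers.get, {"exact_match": exact_match})
print(summary)  # {'exact_match': 0.5}
```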

Conclusion

Opik is a powerful tool that bridges the gap between development and production for LLM applications. Its capabilities—ranging from trace logging to evaluation—empower developers to build reliable, efficient, and high-performing AI systems. By leveraging Opik’s metrics, developers can gain deeper insights into their models and optimize them for real-world applications.

To deepen your understanding, explore the following resources:

Resources

- Opik Documentation

- OpenAI API Documentation

- GitHub Repo for Opik

- Further Reading on LLM Monitoring

- OpenAI Tutorials
