
Introduction

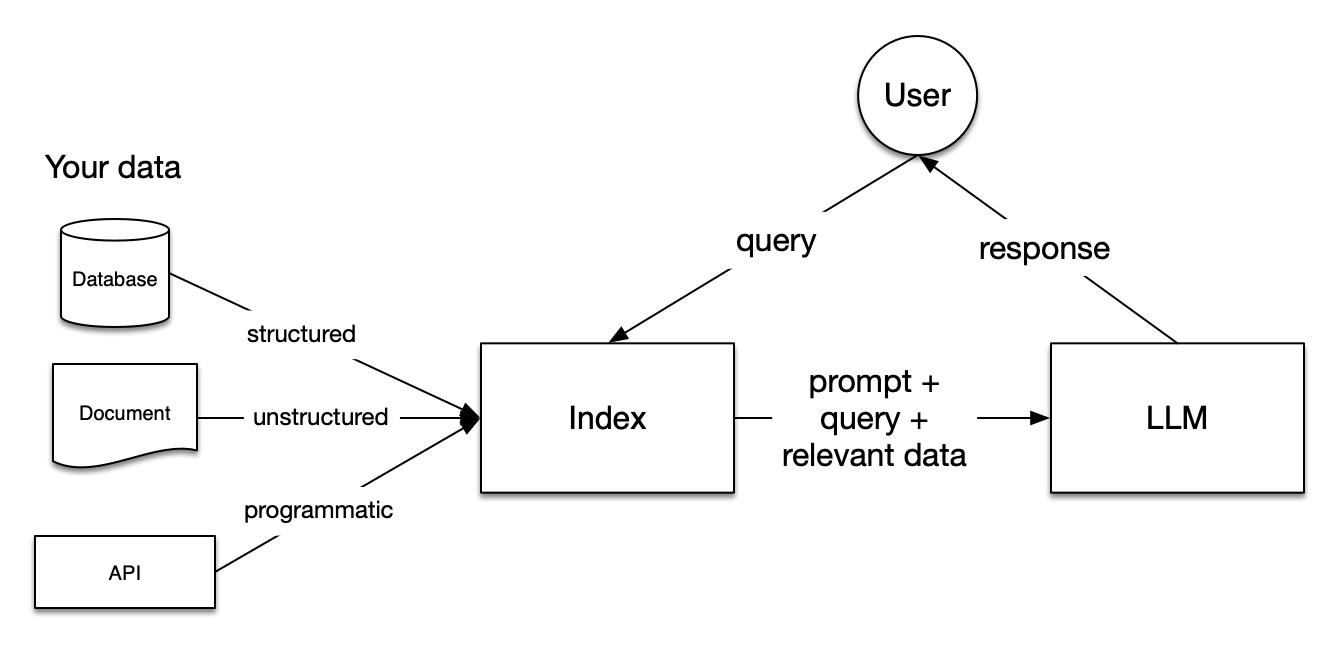

Language models, such as GPT and Mistral, have reshaped the landscape of artificial intelligence by enabling powerful text generation and understanding capabilities. However, their effectiveness often hinges on the quality and relevance of the data they access. Integrating external data sources, both structured and unstructured, remains a significant challenge. This is where LlamaIndex steps in.

LlamaIndex is a versatile Python library designed to bridge the gap between language models and data integration. By facilitating advanced retrieval-augmented generation (RAG) workflows, LlamaIndex enables developers to:

- Connect language models to diverse data sources (databases, APIs, documents).

- Index and transform data for optimized querying.

- Build intelligent applications like chatbots, knowledge retrieval systems, and search tools.

Understanding the Building Blocks

What is LlamaIndex?

At its core, LlamaIndex is a Python library that connects language models to external data sources. Its purpose is to simplify data querying, indexing, and integration tasks for applications powered by language models. By structuring and optimizing data retrieval processes, LlamaIndex makes it easier to build robust RAG workflows.

Key Features of LlamaIndex:

- Seamless Data Integration: Works with APIs, SQL databases, documents, and more.

- Optimized Querying: Structures data for efficient interaction with language models.

- Customizable Pipelines: Supports diverse applications from chatbot development to intelligent search.
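To make "structuring data for efficient interaction" concrete: before indexing, LlamaIndex splits long documents into smaller chunks (which it calls nodes), so retrieval can return just the relevant passage instead of a whole file. LlamaIndex's own splitters are more careful (they respect sentence boundaries and measure size in tokens). The sketch below is a simplified stand-in that splits on whitespace into fixed-size word windows with overlap; the function `chunk_words` and its parameters are illustrative and not part of LlamaIndex.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # this window already reaches the end
            break
    return chunks


if __name__ == "__main__":
    sample = "one two three four five six seven eight nine ten"
    for chunk in chunk_words(sample, chunk_size=4, overlap=1):
        print(chunk)
```

The overlap means a sentence that straddles a chunk boundary can still be retrieved from either neighbouring chunk, at the cost of storing some text twice.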

Explore the Official LlamaIndex Documentation

What is Retrieval-Augmented Generation (RAG)?

RAG is a paradigm that combines retrieval mechanisms with generative capabilities. Instead of relying solely on a pre-trained language model, RAG systems:

- Query external data sources to retrieve relevant information.

- Combine retrieved data with the model's generative abilities to produce contextually accurate and insightful outputs.

LlamaIndex handles the retrieval side of this loop: it indexes external data sources and, at query time, fetches the pieces of data most relevant to the question so they can be handed to the language model.
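The retrieve, augment, generate loop can be sketched in plain Python before bringing in any library. This is a toy under two stated assumptions: relevance is scored by counting shared words (LlamaIndex's vector index compares embeddings instead), and `generate` is a hypothetical hook where a real model call, such as a request to Mistral, would go. All function names here are illustrative.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lower-case words with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], top_k: int = 1) -> list[str]:
    """Retrieval step: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: put the retrieved passages in front of the question."""
    passages = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{passages}\n\nQuestion: {query}"


def answer(query: str, docs: list[str], generate: Callable[[str], str], top_k: int = 1) -> str:
    """Generation step: hand the augmented prompt to a model (any str -> str callable)."""
    return generate(build_prompt(query, retrieve(query, docs, top_k)))


docs = [
    "LlamaIndex is a library for integrating language models with data.",
    "Mistral is a lightweight language model optimized for performance.",
    "RAG workflows combine retrieval mechanisms with generative AI capabilities.",
]

# An identity "model" lets us inspect the prompt a real LLM would receive.
print(answer("What is LlamaIndex?", docs, generate=lambda prompt: prompt))
```

Replacing the lambda with a function that calls a real model turns this into a crude but working RAG pipeline; LlamaIndex swaps the word-overlap scoring for embedding similarity and supplies its own prompt templates.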

Why Use Mistral?

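Mistral AI develops a family of language models, from small open-weight models such as Mistral 7B to larger hosted ones, that aim for strong accuracy relative to their size. Because retrieval supplies the relevant facts, pairing a compact Mistral model with LlamaIndex can handle many knowledge-heavy queries without the computational overhead of much larger models like GPT-4.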

Step-by-Step Guide: Building a RAG System with LlamaIndex and Mistral

Setup and Environment

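Before diving into the code, ensure you have the necessary tools installed. The examples use Mistral's hosted models through LlamaIndex's Mistral integrations, so install those alongside the core library:

pip install llama-index llama-index-llms-mistralai llama-index-embeddings-mistralai

You will also need a Mistral API key, exposed as the MISTRAL_API_KEY environment variable (for example, export MISTRAL_API_KEY=your-key).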
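Before running the rest of the guide, it can help to confirm that the packages import and that an API key is present. This sketch assumes the key is supplied through the `MISTRAL_API_KEY` environment variable, which the LlamaIndex Mistral integrations read when no `api_key` argument is passed; the helper names `module_available` and `missing_requirements` are hypothetical.

```python
import importlib.util
import os
from collections.abc import Mapping

# Modules provided by the pip packages installed above.
REQUIRED_MODULES = [
    "llama_index.core",
    "llama_index.llms.mistralai",
    "llama_index.embeddings.mistralai",
]


def module_available(name: str) -> bool:
    """True if `name` can be imported. find_spec raises if a parent package is missing."""
    try:
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        return False


def missing_requirements(env: Mapping[str, str] = os.environ) -> list[str]:
    """List missing modules, plus the API key variable if it is unset or empty."""
    missing = [m for m in REQUIRED_MODULES if not module_available(m)]
    if not env.get("MISTRAL_API_KEY"):
        missing.append("MISTRAL_API_KEY")
    return missing


if __name__ == "__main__":
    problems = missing_requirements()
    print("All set." if not problems else f"Missing: {', '.join(problems)}")
```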

Importing Libraries

The first step is to import the required libraries:

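from llama_index.core import Document, Settings, VectorStoreIndex
from llama_index.llms.mistralai import MistralAI
from llama_index.embeddings.mistralai import MistralAIEmbedding

Explanation:

- llama_index.core: Provides the core functionality for indexing and querying data.

- VectorStoreIndex: An index that stores an embedding of each document and retrieves documents by similarity to the query.

- Document: Represents an individual piece of data to be indexed.

- Settings: Global configuration that tells LlamaIndex which language model and embedding model to use.

- MistralAI: LlamaIndex's wrapper around Mistral's language models, used for text generation.

- MistralAIEmbedding: LlamaIndex's wrapper around Mistral's embedding model, used to turn documents into vectors.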

Creating and Indexing Data

The next step is to create sample data and build an index.

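# Use Mistral for both generation and embeddings
# (both classes read the MISTRAL_API_KEY environment variable)
Settings.llm = MistralAI(model="mistral-small-latest")
Settings.embed_model = MistralAIEmbedding(model_name="mistral-embed")

# Sample data
documents = [
    Document(text="LlamaIndex is a library for integrating language models with data."),
    Document(text="Mistral is a lightweight language model optimized for performance."),
    Document(text="RAG workflows combine retrieval mechanisms with generative AI capabilities."),
]

# Building the index: each document is embedded and stored in an in-memory vector store
index = VectorStoreIndex.from_documents(documents)

Explanation:

- Settings: Sets Mistral as the global language model and embedding model; without this, LlamaIndex falls back to its OpenAI defaults.

- Sample Data: Here, we define a list of Document objects, each containing a piece of information we want to index.

- VectorStoreIndex.from_documents: Embeds each document and builds an index from the resulting vectors, enabling similarity-based retrieval.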

Expected Output:

No immediate output is generated here, but the index object now contains the indexed documents for later querying.
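Conceptually, a vector index turns each document into a vector (an embedding) once, at build time, and at query time returns the documents whose vectors point in the most similar direction to the query's vector. The sketch below imitates that ranking with no API calls, under one loud assumption: it uses bag-of-words count vectors in place of learned embeddings, so it only matches exact words, whereas real embeddings also capture meaning. `ToyVectorIndex` and its helpers are hypothetical, not LlamaIndex classes.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in 'embedding': word counts (real systems use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors; 0.0 if either is empty."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Hypothetical minimal vector index: embed documents once, rank them by cosine similarity."""

    def __init__(self, texts: list[str]):
        self.texts = texts
        self.vectors = [embed(t) for t in texts]  # computed once, at index-build time

    def retrieve(self, query: str, top_k: int = 2) -> list[tuple[float, str]]:
        q = embed(query)
        scored = [(cosine(q, v), t) for v, t in zip(self.vectors, self.texts)]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
        return scored[:top_k]


toy_index = ToyVectorIndex([
    "LlamaIndex is a library for integrating language models with data.",
    "Mistral is a lightweight language model optimized for performance.",
    "RAG workflows combine retrieval mechanisms with generative AI capabilities.",
])

for score, text in toy_index.retrieve("What is LlamaIndex?", top_k=1):
    print(f"{score:.2f}  {text}")
```

Embedding the documents up front is what makes querying cheap: only the query needs to be embedded at search time.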

Querying the Index

With the index built, you can query it for information relevant to a specific input.

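# Querying the index
query_engine = index.as_query_engine(similarity_top_k=1)
query = "What is LlamaIndex?"
response = query_engine.query(query)
print(response)

Explanation:

- Query: A user-provided question or input.

- index.as_query_engine(): Wraps the index in a query engine. query_engine.query() retrieves the most similar document (similarity_top_k=1) and asks the configured language model, here Mistral, to answer from it.

- Output: The response should contain information about LlamaIndex.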

Expected Output (the wording may vary, because the answer is generated by the language model):

"LlamaIndex is a library for integrating language models with data."

Enhancing the System with Mistral

Adding Mistral to the workflow enables advanced text generation capabilities.

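# Generating text with Mistral
model = MistralAI(model="mistral-small-latest")
query = "Explain RAG workflows."

# Retrieve the most relevant documents without generating an answer yet
retriever = index.as_retriever(similarity_top_k=2)
retrieved_info = "\n".join(node.get_content() for node in retriever.retrieve(query))

response = model.complete(f"Based on the following info:\n{retrieved_info}\n\nAnswer this question: {query}")
print(response.text)

Explanation:

- index.as_retriever(): Returns only the most relevant documents, without calling the language model.

- model.complete(): Sends the prompt, containing the retrieved information and the question, to Mistral and returns the generated text in response.text.

- Integration: Combines the retrieval capabilities of LlamaIndex with the generative power of Mistral.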

Expected Output (illustrative; the generated wording will differ between runs):

"RAG workflows combine retrieval mechanisms with generative AI capabilities to provide accurate and context-rich outputs."

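The prompt in the Mistral example is a single f-string. When more documents are retrieved, it can be worth numbering the passages (so the model can cite which one an answer came from) and capping how much context is sent, since every model has a finite context window. The helper `build_rag_prompt` below is a hypothetical sketch of that idea; it assumes passages arrive ordered best-first, as retrievers normally return them, and its character budget is only a rough proxy for the real token limit, which depends on the model's tokenizer.

```python
def build_rag_prompt(question: str, passages: list[str], max_context_chars: int = 2000) -> str:
    """Number the retrieved passages and stop adding them once the rough character budget is used."""
    blocks, used = [], 0
    for i, passage in enumerate(passages, start=1):
        block = f"[{i}] {passage.strip()}"
        if used + len(block) > max_context_chars:
            break  # assumes best-first order, so the least relevant passages are the ones dropped
        blocks.append(block)
        used += len(block)
    context = "\n".join(blocks)
    return (
        "Answer the question using only the numbered context below. "
        "Cite passage numbers, and say so if the context is insufficient.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


prompt = build_rag_prompt(
    "Explain RAG workflows.",
    [
        "RAG workflows combine retrieval mechanisms with generative AI capabilities.",
        "LlamaIndex is a library for integrating language models with data.",
    ],
)
print(prompt)
```

In the Mistral example, `passages` would hold the text of the retrieved documents, and the returned string would take the place of the f-string passed to the model.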
LlamaIndex Flowchart

Real-World Applications

- Knowledge Retrieval Systems: Build AI assistants that can fetch and summarize information from documents.

- Custom Search Engines: Create search tools for specific domains like healthcare or legal documents.

- Enhanced Chatbots: Integrate contextual knowledge into chatbot conversations.

Conclusion

By combining LlamaIndex and Mistral, developers can build scalable and efficient RAG systems. These tools unlock new possibilities for integrating external data with language models, making AI applications smarter and more context-aware.

Key Takeaways:

- LlamaIndex simplifies data integration and querying.

- RAG workflows enhance the capabilities of language models by retrieving relevant context.

- Mistral provides an efficient generative backbone for producing high-quality outputs.

Resources

- LlamaIndex Documentation

- Mistral Official Site

- GitHub Repository for LlamaIndex

- Build Fast With AI LlamaIndex NoteBook

---------------------------------

Stay Updated: Follow Build Fast with AI's pages for the latest AI updates and resources.

Experts predict 2025 will be the defining year for Gen AI implementation. Want to be ahead of the curve?

Join Build Fast with AI’s Gen AI Launch Pad 2025 - your accelerated path to mastering AI tools and building revolutionary applications.