Introduction

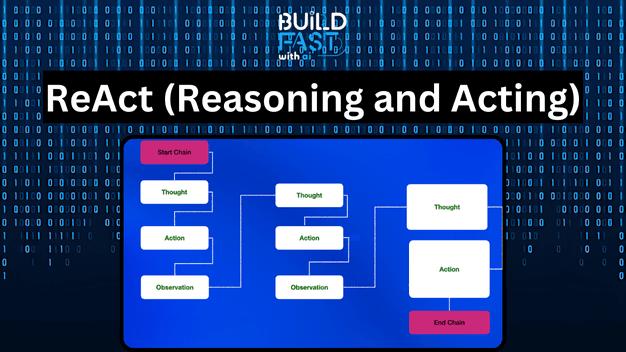

The ReAct (Reasoning and Acting) pattern is revolutionising how we build AI agents by combining reasoning with action-taking capabilities. In this article, we'll explore how to implement ReAct patterns in Python, comparing traditional approaches with modern frameworks like LangGraph. We'll build a practical example that helps users find and compare restaurant ratings — a task that showcases the power of structured reasoning in AI applications.

Understanding the ReAct Pattern

The ReAct pattern follows a simple yet powerful loop:

- Thought: The agent reasons about the current state and what needs to be done

- Action: The agent takes a specific action based on its reasoning

- Observation: The agent receives feedback from the action

- Repeat: The cycle continues until reaching a final answer
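The four steps above can be sketched without any API calls. This is a minimal illustration, not the article's implementation: `make_scripted_model`, `react_loop`, and the `ACTIONS` table are hypothetical names, and the "model" simply replays canned replies so the loop itself is easy to follow.

```python
from typing import Callable, Dict, List

# Toy data for the sketch (ratings taken from the article's example)
RATINGS = {"Pizza Palace": 4.5, "Burger Barn": 4.2}


def get_rating(name: str) -> str:
    """Toy action: return the rating as text, or 'unknown'."""
    return str(RATINGS.get(name, "unknown"))


ACTIONS: Dict[str, Callable[[str], str]] = {"get_rating": get_rating}


def make_scripted_model(replies: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: replays scripted replies in order."""
    remaining = iter(replies)

    def model(prompt: str) -> str:
        return next(remaining)

    return model


def react_loop(model: Callable[[str], str], question: str, max_turns: int = 5) -> str:
    prompt = question
    for _ in range(max_turns):
        reply = model(prompt)  # Thought, plus either an Action or an Answer
        for line in reply.splitlines():
            if line.startswith("Answer:"):
                return line[len("Answer:"):].strip()
            if line.startswith("Action:"):
                # Expected shape: "Action: <name>: <input>"
                _, name, arg = (part.strip() for part in line.split(":", 2))
                # The Observation becomes the next prompt
                prompt = f"Observation: {ACTIONS[name](arg)}"
                break
    return "no answer within the turn limit"


scripted = make_scripted_model([
    "Thought: I should check Pizza Palace first.\nAction: get_rating: Pizza Palace",
    "Thought: Now Burger Barn.\nAction: get_rating: Burger Barn",
    "Thought: 4.5 beats 4.2.\nAnswer: Pizza Palace",
])
answer = react_loop(scripted, "Which is rated higher, Pizza Palace or Burger Barn?")
print(answer)
```

Swapping the scripted model for a real LLM call leaves the loop unchanged, which is the main appeal of the pattern.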

Traditional Implementation

Let's implement a restaurant rating comparison system using the traditional ReAct pattern:

import re

from openai import OpenAI
from dotenv import load_dotenv

# Load OPENAI_API_KEY (and any other settings) from a local .env file
_ = load_dotenv()


class RestaurantAgent:
    def __init__(self, system=""):
        self.system = system
        self.messages = []
        self.client = OpenAI()
        if self.system:
            self.messages.append({'role': 'system', 'content': system})

    def __call__(self, message):
        # Record the user turn, ask the model, then record its reply
        self.messages.append({'role': 'user', 'content': message})
        result = self.execute()
        self.messages.append({'role': 'assistant', 'content': result})
        return result

    def execute(self):
        completion = self.client.chat.completions.create(
            model='gpt-4',
            temperature=0,
            messages=self.messages
        )
        return completion.choices[0].message.content


def get_restaurant_rating(name):
    # Hard-coded sample data; unknown restaurants get a zero rating
    ratings = {
        "Pizza Palace": {"rating": 4.5, "reviews": 230},
        "Burger Barn": {"rating": 4.2, "reviews": 185},
        "Sushi Supreme": {"rating": 4.8, "reviews": 320}
    }
    return ratings.get(name, {"rating": 0, "reviews": 0})


# Registry that maps action names used in the prompt to Python functions
known_actions = {
    "get_rating": get_restaurant_rating
}

prompt = """
You run in a loop of Thought, Action, PAUSE, Observation.
At the end of the loop you output an Answer.
Use Thought to describe your reasoning about the restaurant comparison.
Use Action to run one of the actions available to you - then return PAUSE.
Observation will be the result of running those actions.

Your available actions are:

get_rating:
e.g. get_rating: Pizza Palace
Returns rating and review count for the specified restaurant

Example session:

Question: Which restaurant has better ratings, Pizza Palace or Burger Barn?
Thought: I should check the ratings for both restaurants
Action: get_rating: Pizza Palace
PAUSE
"""


def query(question, max_turns=5):
    # Raw string so that \w is passed to the regex engine unchanged
    action_re = re.compile(r'^Action: (\w+): (.*)$')
    bot = RestaurantAgent(prompt)
    next_prompt = question
    for i in range(max_turns):
        result = bot(next_prompt)
        print(result)
        # Collect every line of the reply that looks like an action request
        actions = [
            action_re.match(a)
            for a in result.split('\n')
            if action_re.match(a)
        ]
        if actions:
            # Run only the first requested action, then feed the result back
            action, action_input = actions[0].groups()
            if action not in known_actions:
                raise Exception(f"Unknown action: {action}: {action_input}")
            observation = known_actions[action](action_input)
            next_prompt = f"Observation: {observation}"
        else:
            # No action requested: the model has produced its final answer
            return


question = """Which restaurant has the better rating, Pizza Palace or Burger Barn?"""

query(question)

Output:

Thought: I should check the ratings for both restaurants
Action: get_rating: Pizza Palace
PAUSE

Thought: Now that I have the rating for Pizza Palace, I should get the rating for Burger Barn.
Action: get_rating: Burger Barn
PAUSE

Thought: Pizza Palace has a rating of 4.5 based on 230 reviews, while Burger Barn has a rating of 4.2 based on 185 reviews. Therefore, Pizza Palace has a higher rating.
Answer: Pizza Palace has a better rating.

This RestaurantAgent class forms the foundation of our implementation. Let's understand its key components:

- State Management: The agent maintains its state through the `messages` list, which keeps track of the entire conversation history. This is crucial for maintaining context throughout the interaction.

- System Prompt: The `system` parameter allows us to define the agent's behavior and available actions. This is where we set up the ReAct pattern's structure of Thought, Action, and Observation.

The action system is implemented through:

- Action Functions: Each action (like `get_restaurant_rating`) is a standalone function that performs a specific task. In a real-world application, these functions might query APIs or databases.

- Action Registry: The `known_actions` dictionary serves as a registry of available actions, making it easy to add or modify capabilities.
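To illustrate how the registry makes capabilities easy to extend, here is a small sketch that adds a second action next to `get_rating`. The `get_review_count` action and the `dispatch` helper are assumptions made for this example and do not appear in the code above; `dispatch` also returns an error string for unknown actions rather than raising, which is one possible design choice.

```python
from typing import Any, Callable, Dict

# Same sample data as in the article's example
RATINGS = {
    "Pizza Palace": {"rating": 4.5, "reviews": 230},
    "Burger Barn": {"rating": 4.2, "reviews": 185},
    "Sushi Supreme": {"rating": 4.8, "reviews": 320},
}


def get_restaurant_rating(name: str) -> dict:
    return RATINGS.get(name, {"rating": 0, "reviews": 0})


def get_review_count(name: str) -> int:
    """Hypothetical second action: return only the number of reviews."""
    return RATINGS.get(name, {"reviews": 0})["reviews"]


known_actions: Dict[str, Callable[[str], Any]] = {
    "get_rating": get_restaurant_rating,
}

# Adding a capability is a single registry entry
known_actions["get_review_count"] = get_review_count


def dispatch(action: str, action_input: str) -> Any:
    """Look up and run an action; report unknown names instead of raising."""
    handler = known_actions.get(action)
    if handler is None:
        return f"Unknown action: {action}"
    return handler(action_input)


print(dispatch("get_review_count", "Sushi Supreme"))
print(dispatch("book_table", "Sushi Supreme"))
```

A new action also has to be described in the system prompt, otherwise the model has no way of knowing it can request it.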

The main query function implements the ReAct loop:

- Pattern Matching: Uses regular expressions to identify when the agent wants to take an action

- Action Execution: Extracts the action name and input, executes the action, and provides the result as an observation

- Loop Control: Continues until either reaching the maximum turns or finding no more actions to take
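The pattern-matching step can be tried in isolation. The sketch below reuses the same regular expression as `query`; the `parse_action` helper is a hypothetical wrapper added only for this illustration.

```python
import re
from typing import Optional, Tuple

# Same pattern as in query(): "Action: <name>: <input>" on a line of its own
action_re = re.compile(r"^Action: (\w+): (.*)$")


def parse_action(reply: str) -> Optional[Tuple[str, str]]:
    """Return (action, input) for the first Action line, or None."""
    for line in reply.split("\n"):
        match = action_re.match(line)
        if match:
            return match.groups()
    return None


reply = (
    "Thought: I should check the ratings for both restaurants\n"
    "Action: get_rating: Pizza Palace\n"
    "PAUSE"
)
print(parse_action(reply))
print(parse_action("Answer: Pizza Palace has a better rating."))
```

Because the pattern is anchored at the start of the line, an indented or differently worded action line is silently ignored and the loop ends as if the model had answered. That is worth keeping in mind when the model's output format drifts.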

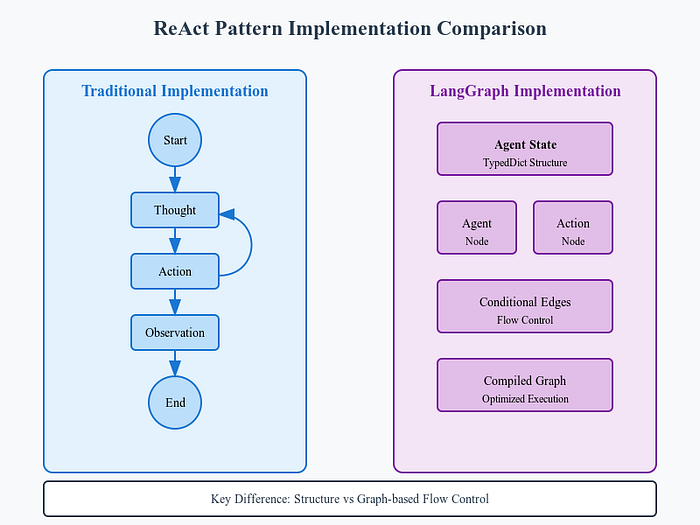

Modern Implementation with LangGraph

Now, let's implement the same functionality using LangGraph, which provides a more structured and maintainable approach:

from langgraph.graph import StateGraph, END
from typing import TypedDict, Annotated, List
import operator

from langchain_core.messages import (
    AnyMessage,
    SystemMessage,
    HumanMessage,
    ToolMessage,
    AIMessage
)
from langchain_core.tools import Tool
from langchain_openai import ChatOpenAI
from dotenv import load_dotenv
import os

# Load environment variables properly
load_dotenv()

# Define a more structured prompt template with tool descriptions
prompt_template = """Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought: {agent_scratchpad}"""


class AgentState(TypedDict):
    # operator.add makes new messages get appended to the existing list
    messages: Annotated[List[AnyMessage], operator.add]


class RestaurantTool:
    def __init__(self):
        self.name = "restaurant_rating"
        self.description = "Get rating and review information for a restaurant"

    def get_restaurant_rating(self, name: str) -> dict:
        ratings = {
            "Pizza Palace": {"rating": 4.5, "reviews": 230},
            "Burger Barn": {"rating": 4.2, "reviews": 185},
            "Sushi Supreme": {"rating": 4.8, "reviews": 320}
        }
        return ratings.get(name, {"rating": 0, "reviews": 0})

    def __call__(self, name: str) -> str:
        result = self.get_restaurant_rating(name)
        return f"Rating: {result['rating']}/5.0 from {result['reviews']} reviews"

class Agent:

def __init__(self, model: ChatOpenAI, tools: List[Tool], system: str = ''):

self.system = system

self.tools = {t.name: t for t in tools}

# Create tool descriptions for the prompt

tool_descriptions = "\n".join(f"- {t.name}: {t.description}" for t in tools)

tool_names = ", ".join(t.name for t in tools)

# Bind tools to the model

self.model = model.bind_tools(tools)

# Initialize the graph

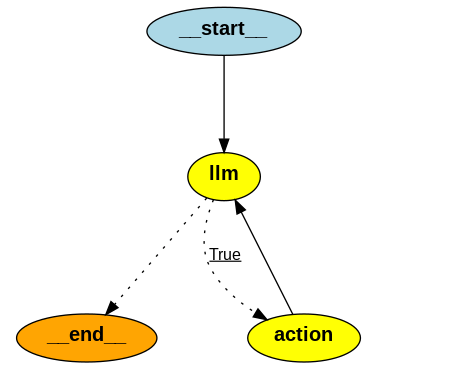

graph = StateGraph(AgentState)

# Add nodes and edges

graph.add_node("llm", self.call_llm)

graph.add_node("action", self.take_action)

# Add conditional edges

graph.add_conditional_edges(

"llm",

self.should_continue,

{True: "action", False: END}

)

graph.add_edge("action", "llm")

# Set entry point and compile

graph.set_entry_point("llm")

self.graph = graph.compile()

def should_continue(self, state: AgentState) -> bool:

"""Check if there are any tool calls to process"""

last_message = state["messages"][-1]

return hasattr(last_message, "tool_calls") and bool(last_message.tool_calls)

def call_llm(self, state: AgentState) -> AgentState:

"""Process messages through the LLM"""

messages = state["messages"]

if self.system and not any(isinstance(m, SystemMessage) for m in messages):

messages = [SystemMessage(content=self.system)] + messages

response = self.model.invoke(messages)

return {"messages": [response]}

def take_action(self, state: AgentState) -> AgentState:

"""Execute tool calls and return results"""

last_message = state["messages"][-1]

results = []

for tool_call in last_message.tool_calls:

tool_name = tool_call['name']

if tool_name not in self.tools:

result = f"Error: Unknown tool '{tool_name}'"

else:

try:

tool_result = self.tools[tool_name].invoke(tool_call['args'])

result = str(tool_result)

except Exception as e:

result = f"Error executing {tool_name}: {str(e)}"

results.append(

ToolMessage(

tool_call_id=tool_call['id'],

name=tool_name,

content=result

)

)

return {"messages": results}

def invoke(self, message: str) -> List[AnyMessage]:

"""Main entry point for the agent"""

initial_state = {"messages": [HumanMessage(content=message)]}

final_state = self.graph.invoke(initial_state)

return final_state["messages"]

# Create and configure the agent

def create_restaurant_agent() -> Agent:

model = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

# Create tool instance

restaurant_tool = RestaurantTool()

# Convert to LangChain Tool

tool = Tool(

name=restaurant_tool.name,

description=restaurant_tool.description,

func=restaurant_tool

)

# Create system prompt

system_prompt = prompt_template.format(

tools=tool.description,

tool_names=tool.name,

input="{input}",

agent_scratchpad="{agent_scratchpad}"

)

# Create and return agent

return Agent(model, [tool], system=system_prompt)

# Example usage

if __name__ == "__main__":

agent = create_restaurant_agent()

response = agent.invoke("""which resturant have better rating, Pizza Palace or Burger Barn?""")

for message in response:

print(f"{message.type}: {message.content}")he state management in LangGraph is more sophisticated:

Output:

human: which resturant have better rating, Pizza Palace or Burger Barn? ai: tool: Rating: 4.5/5.0 from 230 reviews tool: Rating: 4.2/5.0 from 185 reviews ai: Final Answer: Pizza Palace has a better rating with 4.5 out of 5.0 compared to Burger Barn's rating of 4.2 out of 5.0.

- Typed State: Using TypedDict provides type safety and clear documentation of the state structure

- Explicit State Components: Each piece of information has a designated place in the state

- Message History: Maintains a sequence of messages for context, similar to the traditional approach but with better structure
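The effect of the `operator.add` annotation on `AgentState` can be imitated with the standard library alone. The sketch below is only a conceptual illustration and makes no claim about how LangGraph merges state internally; `apply_update` is a hypothetical helper, and plain strings stand in for message objects.

```python
import operator
from typing import Annotated, List, TypedDict, get_type_hints


class AgentState(TypedDict):
    # The second argument to Annotated is the reducer for this field
    messages: Annotated[List[str], operator.add]


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update using each field's annotated reducer."""
    hints = get_type_hints(AgentState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        reducer = metadata[0] if metadata else None
        # With a reducer, combine old and new; without one, overwrite
        merged[key] = reducer(state[key], value) if reducer else value
    return merged


state = {"messages": ["human: Which restaurant is rated higher?"]}
new_state = apply_update(state, {"messages": ["ai: Pizza Palace"]})
print(new_state["messages"])
```

A node therefore only needs to return the messages it produced, and the full history is rebuilt by the reducer. The original `state` dictionary is left untouched.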

The node system in LangGraph offers several advantages:

- Functional Approach: Each node is a function that takes the current state and returns an update to it

- Clear Responsibilities: Each node has a single responsibility: the llm node (`call_llm`) decides what to do next, and the action node (`take_action`) runs the requested tools

- Controlled State Updates: Nodes return only their new messages, and the `operator.add` reducer declared on `AgentState` appends them to the history, which makes the system more predictable
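The routing decision made after the LLM node is small enough to test on its own. In this sketch, `SimpleNamespace` objects are hypothetical stand-ins for real message objects, and the contents of the tool call are invented for illustration; only the presence of a non-empty `tool_calls` attribute matters.

```python
from types import SimpleNamespace


def should_continue(state: dict) -> bool:
    """Return True when the last message asks for at least one tool call."""
    last_message = state["messages"][-1]
    return bool(getattr(last_message, "tool_calls", None))


# Stand-in for an AI message that requests a tool (fields are illustrative)
wants_tool = SimpleNamespace(
    content="",
    tool_calls=[{"name": "restaurant_rating", "args": {}, "id": "call_1"}],
)
# Stand-in for an AI message that carries the final answer
final_answer = SimpleNamespace(content="Final Answer: Pizza Palace", tool_calls=[])
# Stand-in for a message type that has no tool_calls attribute at all
human = SimpleNamespace(content="Which restaurant is rated higher?")

print(should_continue({"messages": [human, wants_tool]}))
print(should_continue({"messages": [human, wants_tool, final_answer]}))
```

Keeping this check separate from the nodes means the same function can be unit-tested without calling a model.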

The graph construction showcases LangGraph's power:

- Explicit Flow: The graph structure makes the flow of control explicit and visual

- Conditional Routing: Using `add_conditional_edges` allows for complex decision-making about the next step

- Compilation: The `compile()` step checks the graph structure and turns it into a runnable object
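To make the control flow concrete, here is a toy graph runner written with the standard library only. It is a sketch of the idea behind nodes, edges, and conditional routing, not LangGraph's implementation: `ToyGraph` is a hypothetical class whose method names merely mirror the ones used above, and the "llm" node is a fake that asks for the tool once and then answers.

```python
from typing import Callable, Dict, List, Tuple

END = "__end__"  # sentinel used only by this sketch
State = Dict[str, List[str]]


class ToyGraph:
    """Tiny stand-in for a state graph; not LangGraph's implementation."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, str] = {}
        self.routers: Dict[str, Tuple[Callable[[State], bool], Dict[bool, str]]] = {}
        self.entry = END

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def add_conditional_edges(self, src, router, mapping) -> None:
        self.routers[src] = (router, mapping)

    def set_entry_point(self, name: str) -> None:
        self.entry = name

    def invoke(self, state: State, max_steps: int = 20) -> State:
        current, steps = self.entry, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached")
            steps += 1
            update = self.nodes[current](state)
            # Append the node's new messages to the history
            state = {"messages": state["messages"] + update["messages"]}
            if current in self.routers:
                router, mapping = self.routers[current]
                current = mapping[router(state)]
            else:
                current = self.edges.get(current, END)
        return state


def llm(state: State) -> State:
    # Fake model: request the tool once, then give a final answer
    if any(m.startswith("tool:") for m in state["messages"]):
        return {"messages": ["ai: final answer"]}
    return {"messages": ["ai: call tool"]}


def action(state: State) -> State:
    return {"messages": ["tool: Rating: 4.5/5.0 from 230 reviews"]}


def should_continue(state: State) -> bool:
    return state["messages"][-1] == "ai: call tool"


graph = ToyGraph()
graph.add_node("llm", llm)
graph.add_node("action", action)
graph.add_conditional_edges("llm", should_continue, {True: "action", False: END})
graph.add_edge("action", "llm")
graph.set_entry_point("llm")

result = graph.invoke({"messages": ["human: How is Pizza Palace rated?"]})
for message in result["messages"]:
    print(message)
```

The run visits llm, action, and llm again before reaching the end, which is the same shape as the human, ai, tool, ai sequence in the output shown earlier.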

Key Differences and Benefits

- Structured Flow: LangGraph provides a more explicit structure through its graph-based approach, making the flow of logic clearer and more maintainable.

- State Management: LangGraph's typed state management helps catch errors early and makes the code more robust.

- Modularity: The graph-based approach makes it easier to add new capabilities or modify existing ones without changing the core logic.

- Visualization: LangGraph allows for easy visualization of the agent's decision-making process, which is valuable for debugging and explanation.

Use Cases and Applications

The ReAct pattern, whether implemented traditionally or with LangGraph, is particularly useful for:

- Customer service automation

- Data analysis workflows

- Information retrieval systems

- Decision-making processes

- Task automation

Conclusion

While the traditional ReAct pattern implementation offers simplicity and ease of understanding, LangGraph provides a more robust and scalable solution for production environments. The choice between the two approaches depends on your specific needs:

Choose the traditional approach for:

- Prototyping and learning

- Simple applications

- Quick implementations

Choose LangGraph for:

- Production applications

- Complex workflows

- Team-based development

- Systems requiring extensive monitoring and debugging

Both approaches demonstrate the power of combining reasoning with action in AI agents, leading to more capable and reliable automated systems.

Resources and Community

Join our community of 12,000+ AI enthusiasts and learn to build powerful AI applications! Whether you're a beginner or an experienced developer, this tutorial will help you understand and implement AI agents in your projects.

- Website: www.buildfastwithai.com

- LinkedIn: linkedin.com/company/build-fast-with-ai/

- Instagram: instagram.com/buildfastwithai/

- Twitter: x.com/satvikps

- Telegram: t.me/BuildFastWithAI